1. Quick start

1.1. On Kubernetes

You can install Ontrack using its Helm chart:

helm repo add ontrack https://nemerosa.github.io/ontrack-chartTo install the ontrack chart:

helm install ontrack ontrack/ontrackTo uninstall the chart:

helm delete ontrackThis installs 4 services:

-

Ontrack itself

-

a Postgres 15 database

-

an Elasticsearch 7 single node

-

a RabbitMQ message broker

|

To connect to Ontrack, enable the ingress or activate a port forward. |

See https://github.com/nemerosa/ontrack-chart for more options.

1.2. With Docker Compose

On a local machine, you can start Ontrack using Docker Compose:

curl -fsSLO https://raw.githubusercontent.com/nemerosa/ontrack/master/compose/docker-compose.yml

docker compose up -dThis sets up:

-

a Postgres database

-

an ElasticSearch (single node)

-

a RabbitMQ message broker

-

Ontrack running on port 8080

Go to http://localhost:8080 and start using Ontrack.

The initial administrator credentials are admin / admin.

Where to go from there?

-

learn how to feed information into Ontrack

-

learn how to use the Ontrack UI

-

… or to use its Ontrack API

You can also check the following sections:

2. Installation

There are several ways to install Ontrack.

2.1. Docker Compose installation

The fastest way to start Ontrack is to use Docker Compose, but it might not be adapted for a production environment.

The Docker Compose file can be downloaded from:

You can simply run it using:

docker-compose up -dThis starts three services:

-

Ontrack itself at http://localhost:8080

-

a Postgres database

-

an Elasticsearch single node

-

a RabbitMQ message broker

Neither Postgres, Elasticsearch and RabbitMQ are exposed by default, but you can of course edit the Docker Compose file at your convenience.

The version of Ontrack is set by default to 4 (latest 4.x version) but

you can override it using the ONTRACK_VERSION environment variable.

The memory settings and other JVM parameters for Ontrack can be passed

using the JAVA_OPTIONS environment variable, which defaults to

-Xms1024m -Xmx1024m.

Other Ontrack configuration properties must be passed through environment variables.

Three named Docker volumes are created for the data to be persisted:

-

ontrack_postgres -

ontrack_elasticsearch -

ontrack_data

For other volume configuration, please edit the Docker Compose file.

2.2. Docker installation

The Ontrack Docker image is available in the Docker Hub at https://hub.docker.com/r/nemerosa/ontrack.

Each specific version is available and also a "latest" version per major and minor version. For example:

-

4

-

4.0

-

4.0.0

To run Ontrack, you need to make sure that the minimal dependencies are available:

-

Postgres

-

Elasticsearch

-

RabbitMQ

| See Installation dependencies for details. |

You can then run Ontrack using:

docker container run \

--detach \

--publish 8080:8080 \

-e SPRING_DATASOURCE_URL=<Postgres Ontrack DB JDBC URL> \

-e SPRING_DATASOURCE_USERNAME=<Postgres Ontrack DB Username> \

-e SPRING_DATASOURCE_PASSWORD=<Postgres Ontrack DB Password> \

-e SPRING_ELASTICSEARCH_URIS=<Elasticsearch URL> \

-e SPRING_RABBITMQ_HOST=<RabbitMQ Host>

-e SPRING_RABBITMQ_USERNAME=<RabbitMQ Username>

-e SPRING_RABBITMQ_PASSWORD=<RabbitMQ Password>

nemerosa/ontrack:4The memory settings and other JVM parameters for Ontrack can be passed using the JAVA_OPTIONS environment variable.

Other Ontrack configuration properties must be passed through environment variables.

Optionally, a volume can be mapped to the Ontrack /var/ontrack/data Docker volume. This is particularly needed when using a secret storage based on the file system (see Setup).

2.3. Helm installation

You can install Ontrack into a Kubernetes cluster using Helm:

helm repo add ontrack https://nemerosa.github.io/ontrack-chart

helm install my-ontrack-release ontrack/ontrackThis installs 4 services:

-

Ontrack itself

-

a Postgres 11 database

-

an Elasticsearch 7 single node

-

a RabbitMQ message broker

To connect to Ontrack, enable the ingress or activate a port forward.

For more options and documentation, please check the chart repository at https://github.com/nemerosa/ontrack-chart

2.4. Package installation

Ontrack provides installation packages for Debian & CentOS. Both packages can be downloaded in the release page in GitHub: https://github.com/nemerosa/ontrack/releases.

To run Ontrack, you need to make sure that the minimal dependencies are available:

-

Postgres

-

Elasticsearch

-

RabbitMQ

2.4.1. RPM installation

To install Ontrack using RPM:

rpm -i ontrack.rpmThe following directories are created:

| Directory | Description |

|---|---|

|

Binaries and scripts |

|

Working and configuration directory |

|

Logging directory |

You can optionally create an application.yml configuration file in

/usr/lib/ontrack. For example, to customise the port Ontrack is running on:

server:

port: 9080Ontrack is installed as a service using /etc/init.d/ontrack.

# Starting Ontrack

sudo service ontrack start

# Status of Ontrack

sudo service ontrack status

# Stopping Ontrack

sudo service ontrack stopTo upgrade Ontrack:

# Stopping Ontrack

sudo service ontrack stop

# Updating

sudo rpm --upgrade ontrack.rpm

# Starting Ontrack

sudo service ontrack startThe optional /etc/default/ontrack file can be used to define

environment variables like

JAVA_OPTIONS or SPRING_DATASOURCE_URL.

For example:

JAVA_OPTIONS=-Xmx2048m

SPRING_DATASOURCE_URL=jdbc:postgres://pg/ontrackOther Ontrack configuration properties can be passed the same way.

2.4.2. Debian installation

To install Ontrack using Debian:

dpkg -i ontrack.debThe following directories are created:

| Directory | Description |

|---|---|

|

Binaries and scripts |

|

Working and configuration directory |

|

Logging directory |

Ontrack is installed as a service using /etc/init.d/ontrack.

# Starting Ontrack

sudo service ontrack start

# Status of Ontrack

sudo service ontrack status

# Stopping Ontrack

sudo service ontrack stopThe optional /etc/default/ontrack file can be used to define

environment variables like

JAVA_OPTIONS or SPRING_DATASOURCE_URL.

For example:

JAVA_OPTIONS=-Xmx2048m

SPRING_DATASOURCE_URL=jdbc:postgres://pg/ontrackOther Ontrack configuration properties can be passed the same way.

2.5. JAR installation

Ontrack can be downloaded as a JAR and started as a JVM application.

To run Ontrack, you need to make sure that the minimal dependencies are available:

-

Postgres

-

Elasticsearch

-

RabbitMQ

|

You need a JDK 11 or better to run Ontrack. |

Download the JAR from the Ontrack release page.

Start it using:

java -jar ontrack.jarOptions can be passed on the command line, either:

-

using system properties:

-Dspring.datasource.url=...-

or environment variables:

SPRING_DATASOURCE_URL=...2.6. Installation dependencies

Ontrack relies on the following components to be available:

-

Postgres - for storage of information

-

Elasticsearch - for search indexation

-

RabbitMQ - for asynchronous processing

2.6.1. Postgres

Versions 9.5.+ to version 11.+ of Ontrack have been tested.

By default, Ontrack will use the following configuration properties and their default values to connect to Postgres:

| Property | Env variable | Description | Default value |

|---|---|---|---|

spring.datasource.url |

SPRING_DATASOURCE_URL |

JDBC URL to the Postgres Ontrack DB |

|

spring.datasource.username |

SPRING_DATASOURCE_USERNAME |

Username used to connect to the Postgres Ontrack DB |

|

spring.datasource.password |

SPRING_DATASOURCE_PASSWORD |

Password used to connect to the Postgres Ontrack DB |

|

Other properties are available in Spring Boot.

2.6.2. Elasticsearch

Version 7.5.+ has been tested.

By default, Ontrack will use the following configuration properties and their default values to connect to ElasticSearch:

| Property | Env variable | Description | Default value |

|---|---|---|---|

spring.elasticsearch.uris |

SPRING_ELASTICSEARCH_URIS |

REST URI of Elasticsearch |

Other properties are available in Spring Boot.

2.6.3. RabbitMQ

Version 3.8.+ has been tested.

By default, Ontrack will use the following configuration properties and their default values to connect to Postgres:

| Property | Env variable | Description | Default value |

|---|---|---|---|

spring.rabbitmq.host |

SPRING_RABBITMQ_HOST |

RabbitMQ host name |

|

spring.rabbitmq.username |

SPRING_RABBITMQ_USERNAME |

RabbitMQ user name |

|

spring.rabbitmq.password |

SPRING_RABBITMQ_PASSWORD |

RabbitMQ password |

|

Other properties are available in Spring Boot.

3. Setup

While Ontrack can be configured using the UI, it’s recommended to use the Configuration as Code (CasC) feature.

3.1. Configuration as Code

Ontrack supports to be configured as code by default.

It uses a set of YAML resources defined as comma-separated list of locations by the ontrack.config.casc.locations configuration property.

For example, when using the environment variables:

ONTRACK_CONFIG_CASC_LOCATIONS=file:/path/to/file.yaml,https://path.com/fileAll YAML resources defined by those locations are merged together according to the following rules:

-

right-most files take precedence for single values

-

arrays are always concatenated to each other

The list of locations can contain path to folders on a file system. In this case, Ontrack will use all the files in this folder.

3.1.1. [experimental] Casc secrets

Secrets can be injected into Casc file using this syntax:

{{ secret.<base>.<name> }}For example:

some-secret-field: {{ secret.my-secret.my-property }}By default, this is interpolated and evaluated using the SECRET_<BASE>_<NAME> environment variable, SECRET_MY_SECRET_MY_PROPERTY for the example above.

If this environment variable value is my-password, the final Casc file will be:

some-secret-field: my-passwordAlternatively, the secrets can be mapped to files by settings the ontrack.config.casc.secrets.type configuration property to file and the ontrack.config.casc.secrets.directory one to a valid directory.

Ontrack will then look for the secrets in files called <base>/<name>.

In the example above, the value of the {{ secret.my-secret.my-property }} expression will be looked for in the <directory>/my-secret/my-property file.

|

The |

3.1.2. Casc schema

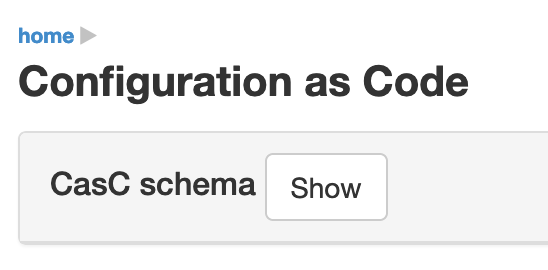

All those files must comply with the Ontrack CasC format. This schema is available in the UI in the user menu at Configuration as code, and by clicking on the Show button right of the CasC schema section:

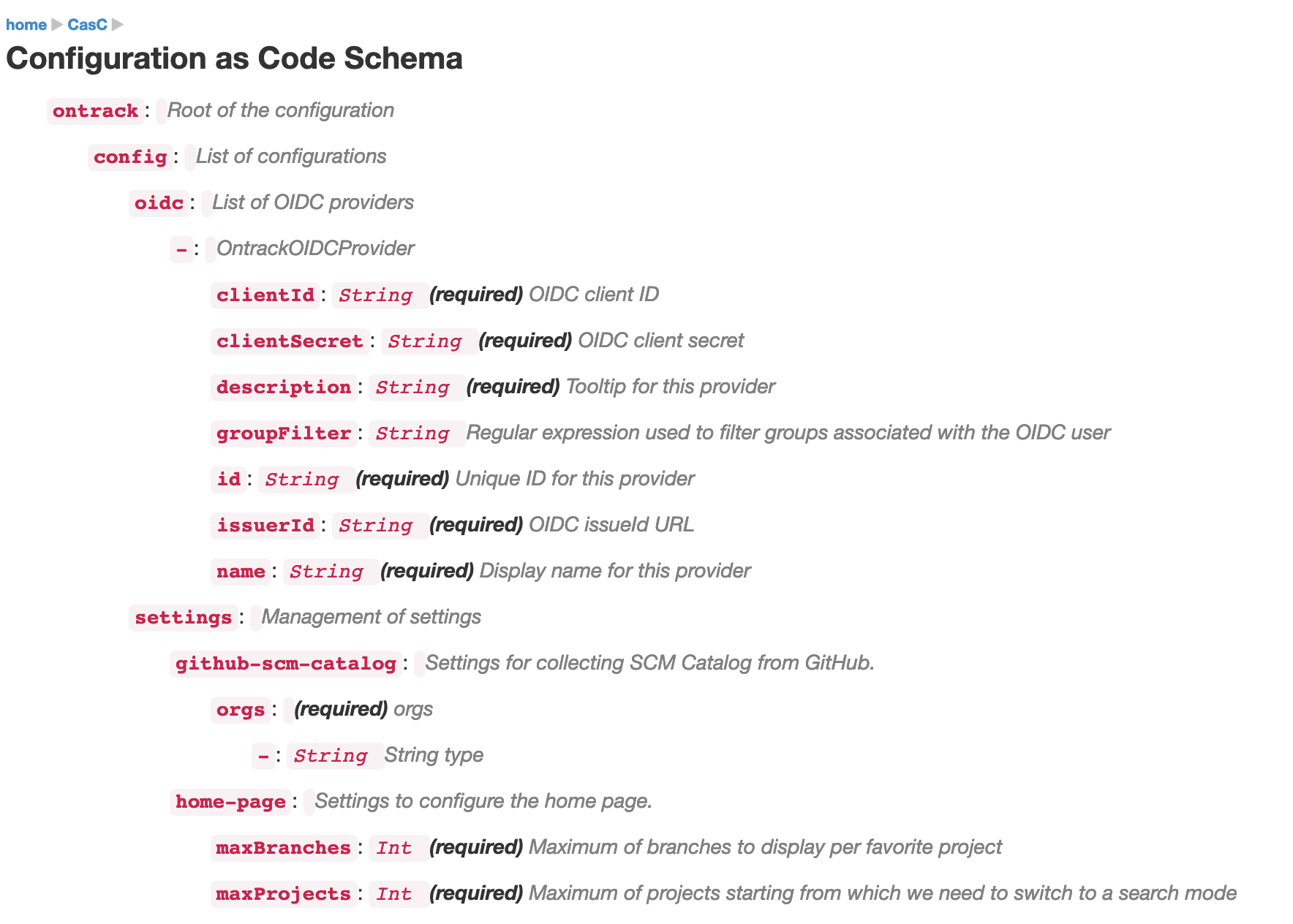

This goes to the page at http://localhost:8080/#/extension/casc/casc-schema :

3.1.3. Examples

To configure the security settings so that all authenticated users have access to all the projects and can participate in all of them:

ontrack:

config:

settings:

security:

grantProjectViewToAll: true

grantProjectParticipationToAll: trueTo add an OIDC provider (Okta for example):

ontrack:

config:

oidc:

- id: okta

name: My Okta

description: The Okta account used by my company

issueId: https://<okta domain>.okta.com/oauth2/default

clientId: <Client ID of the application in Okta>

clientSecret: <Client secret of the application in Okta>

groupFilter: ontrack-.*To add a GitHub configuration based on an OAuth2 token:

ontrack:

config:

github:

- name: GitHub

token: <your secret token>3.1.4. Controls

The Configuration as code configuration page is available at:

-

user menu > Configuration as code

-

directly at http://localhost:8080/#/extension/casc/casc-schema

On this page, you can:

-

display the schema

-

see the list of locations where Ontrack fetches its CasC YAML resources

-

reload the configuration as code

-

display the current configuration as YAML (handy when migrating an existing installation to CasC)

Reloading the configuration as code can be done from the UI as mentioned above but also:

-

through a

PUTREST call at/extension/casc/reload:

curl -X PUT --user admin <ontrack>/extension/casc/reload-

through the following GraphQL mutation:

mutation {

reloadCasc {

errors {

message

}

}

}3.1.5. Upload

The default Casc setup relies on files (or URL) available from the Ontrack application and in a SaaS context, these may not be available or even configurable.

A CasC upload endpoint can be enabled to allow users to upload their own Casc configuration, by using the ontrack.config.casc.upload.enabled configuration property or ONTRACK_CONFIG_CASC_UPLOAD_ENABLED environment property.

When done, it becomes possible to upload a YAML file, which is will be picked up next time the Casc is reloaded.

For example, given a casc.yaml file:

curl --user $USER:$TOKEN \

$URL/extension/casc/upload \

-F "[email protected];type=application/yaml"|

The user must have the Global settings user rights (typically an administrator). |

3.1.6. Using a JSON schema to edit Casc YAML files

You can download a [JSON Schema](https://json-schema.org/) that can be used to edit Casc YAML files.

First, download this schema locally by navigating to System > Configuration as Code. Select Schema and click on JSON Schema.

This offers to download an ontrack-casc-schema.json file: save it locally.

|

The Ontrack Casc JSON schema is versioned using the Ontrack version you download it from. |

To use it for the edition of a Casc YAML file, you can do the following in Intellij IDEA:

-

in the Settings, select Languages & Frameworks > Schema & DTDs > JSON Schema Mappings

-

in Schema file or URL, click on the folder icon and select the downloaded

ontrack-casc-schema.jsonfile -

apply and save the settings

Open a YAML file.

To associate it with the Ontrack Casc schema, click on the Schema component in the bottom right corner of the file and select ontrack-casc.

You should now have auto-completion and validation.

4. Authentication

Ontrack supports the following authentication backends:

|

Ontrack is able to work with multiple sources of authentication. |

4.1. Built-in authentication

Ontrack comes with its own registry to store accounts. In particular, it contains the built-in admin user.

No configuration is needed to enable the built-in authentication.

To create and manage accounts, go to your user menu and select Account management.

When user are connected using the built-in authentication mechanism, they are able to change their password using the Change password user menu.

|

Administrators can lock built-in users so they cannot change their password; this is needed to create fixed guest accounts. |

For the management of built-in accounts, see Accounts management.

4.2. LDAP authentication

It is possible to enable authentication using a LDAP instance and to use the LDAP-defined groups to map them against Ontrack groups.

4.2.1. LDAP general setup

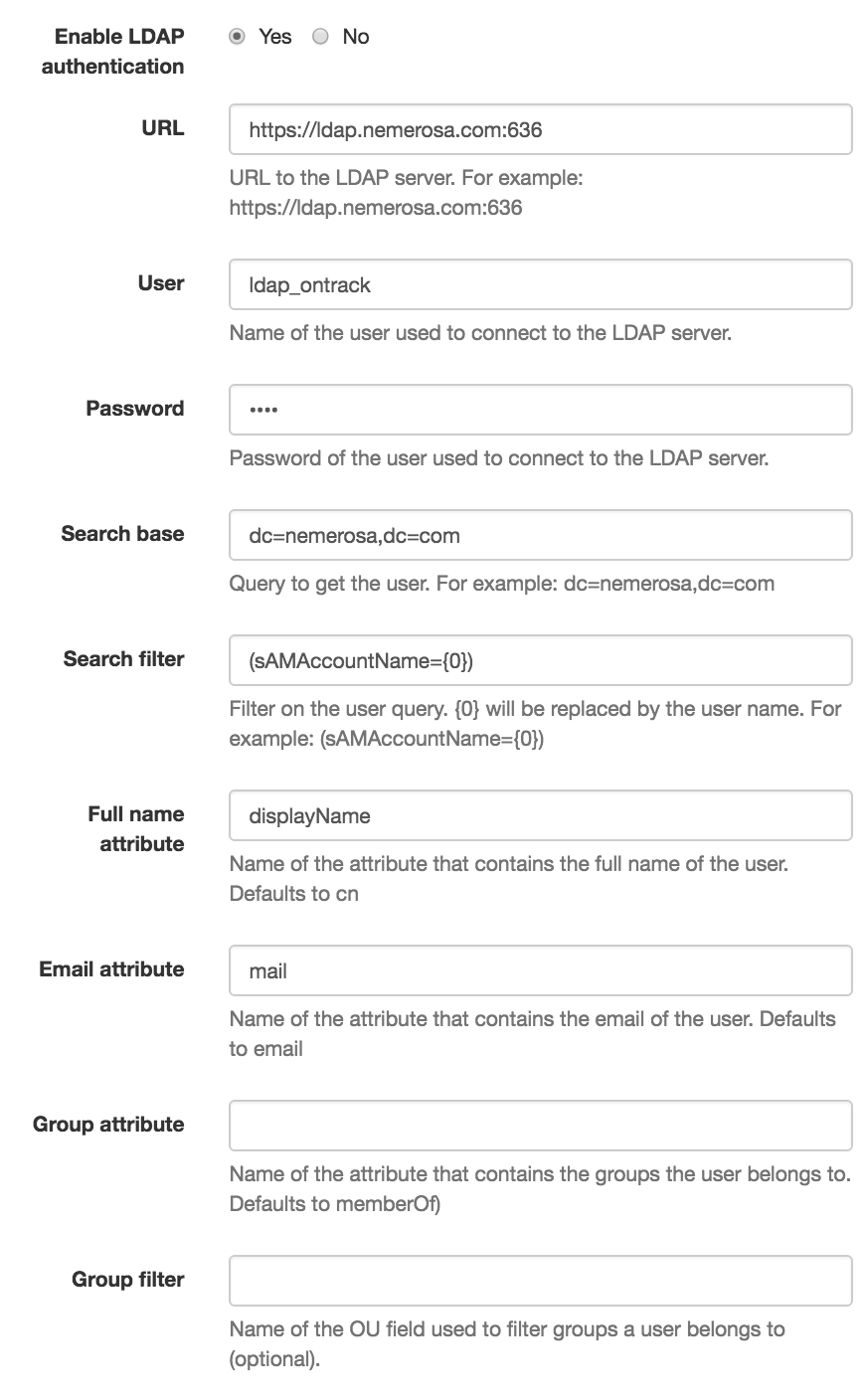

As an administrator, go to the Settings menu. In the LDAP settings section, click on Edit and fill the following parameters:

-

Enable LDAP authentication: Yes

-

URL: URL to your LDAP

-

User and Password: credentials needed to access the LDAP

-

Search base: query to get the user

-

Search filter: filter on the user query

-

Full name attribute: attribute which contains the full name,

cnby default -

Email attribute: attribute which contains the email,

emailby default -

Group attribute: attribute which contains the list of groups a user belongs to,

memberOfby default -

Group filter: optional, name of the OU field used to filter groups a user belongs to

The list of groups (indicated by the memberOf

attribute or any other attribute defined by the Group attribute

property) is not searched recursively and that only the direct groups

are taken into account.

|

For example:

| The settings shown above are suitable to use with an Activate Directory LDAP instance. |

4.2.2. LDAP group mapping

A LDAP group a user belongs to can be used to map onto an Ontrack group.

As an administrator, go to the Account management menu and click on the LDAP mapping command.

| This command is only available if the LDAP authentication has been enabled in the general settings. |

To add a new mapping, click on Create mapping and enter:

-

the name of the LDAP group you want to map

-

the Ontrack group which must be mapped

For example, if you map the ontrack_admin LDAP group to an Administrators group in Ontrack, any user who belongs to ontrack_admin will automatically be assigned to the Administrators group when connecting.

| This assignment based on mapping is dynamic only, and no information is stored about it in Ontrack. |

Note that those LDAP mappings can be generated using configuration as code.

Existing mappings can be updated and deleted.

4.3. OpenID authentication

Ontrack supports OpenId identify providers for authentication and group permissions.

4.3.1. Keycloak setup

| Ontrack has been tested with Keycloak 12.0.4. |

Given a Keycloak realm, the Ontrack client can be configured this way:

-

client protocol:

openid-connect -

valid redirect URLs:

<ontrack url>/* -

base URL:

<ontrack url>/login/oauth2/code/<keycloak realm> -

web origins:

<ontrack url>

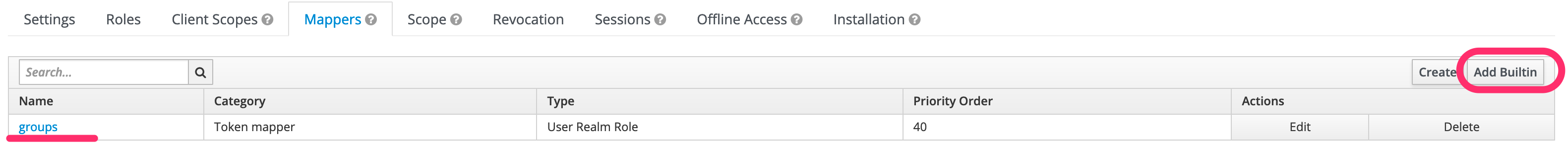

If you want to use Keycloak for group mappings in Ontrack, go to Mappers and add the built-in "groups" mapper:

On the Ontrack side, as an administrator:

-

navigate to the OIDC providers menu

-

click on "Create provider"

-

add the following information:

-

ID: unique ID for your provider. It must be aligned with the name of the Keycloak realm (see Keycloak configuration above)

-

Name: a display name, which will be used on the login page

-

Description: used as a tooltip on the login page

-

Issuer ID:

<keycloak url>/auth/realms/<keycloak realm> -

Client ID: ID of the client in Keycloak

-

Client secret: can be left blank for Keycloak

-

Group filter: regular expression to filter the group list sent by Keycloak in the

groupsclaim

-

If Ontrack runs behind a SSL termination proxy and if the HTTP headers are not all forwarded, the "Force HTTPS" option can be set to true in order to force the redirect URI to use HTTPS.

|

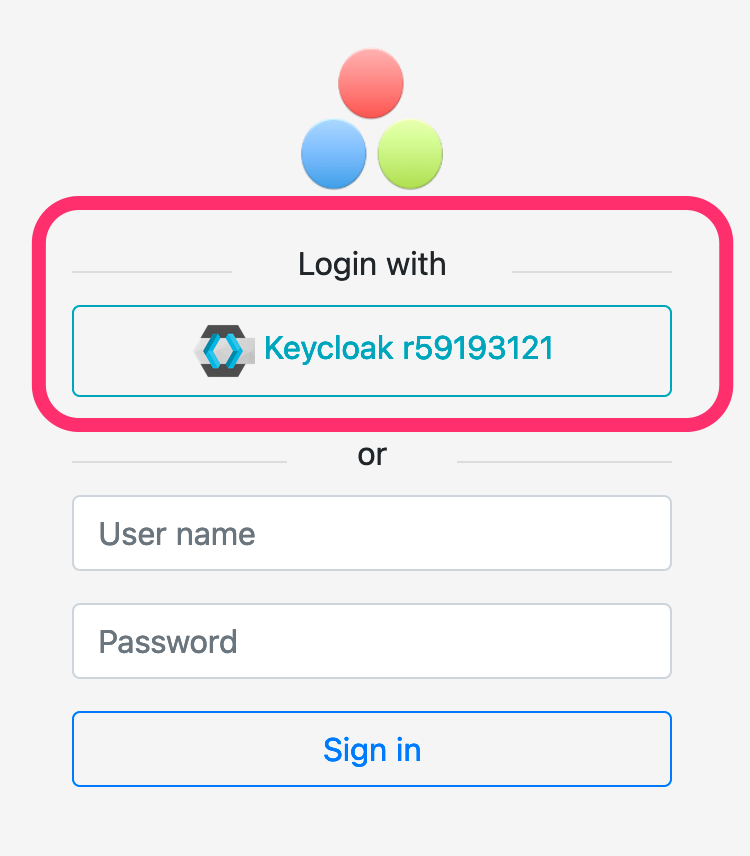

In the OIDC provider list, you can optionally set a picture for this configuration. This picture will be used on the login page. For example:

When the users click on the button, they will be redirected to Keycloak for authentication.

Upon a first connection, an account will be created automatically on Ontrack, based on the information returned by Keycloak.

|

Keycloak is better configured using Configuration as Code. See some examples here. |

4.3.2. Okta setup

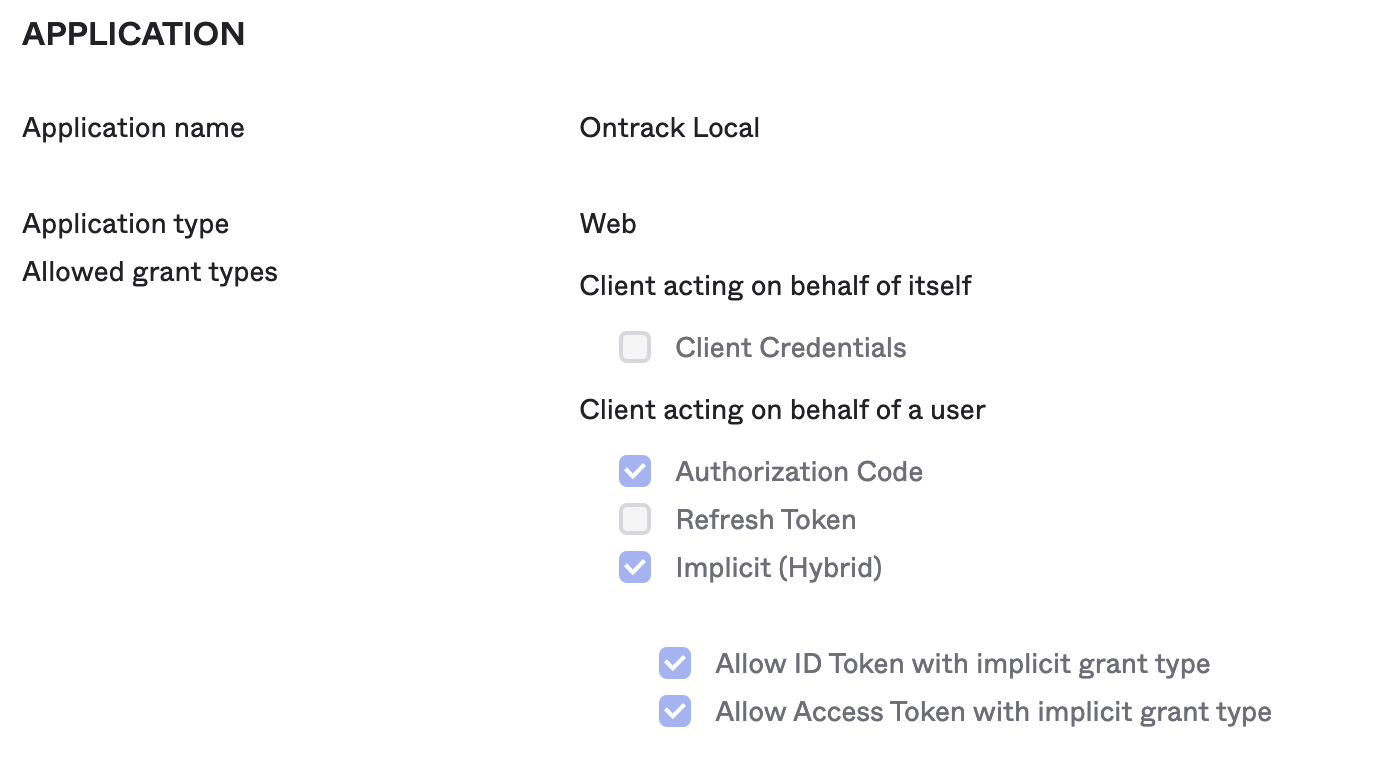

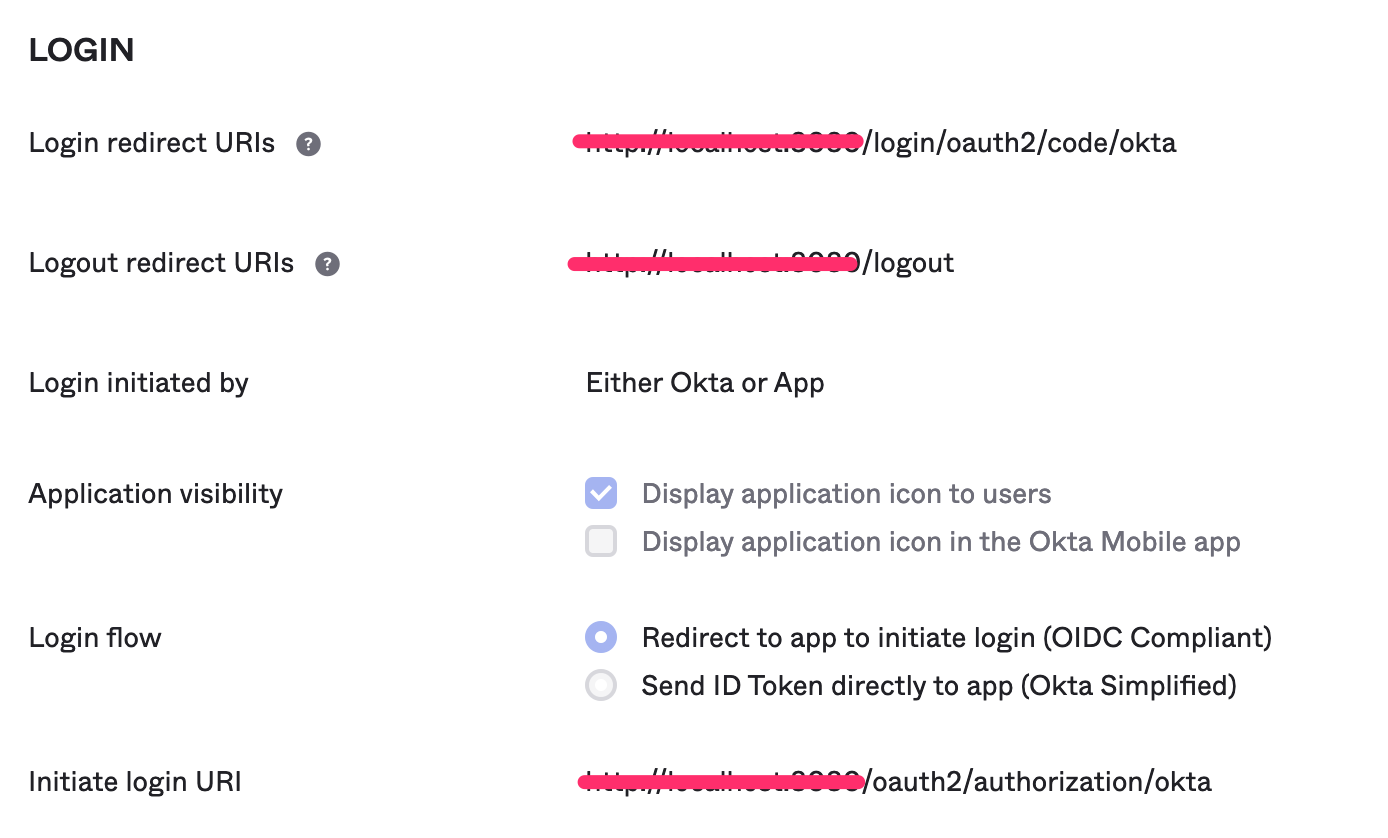

In Okta, an Ontrack application must be configured with the following parameters:

-

application type:

Web -

Allowed grant types:

-

Client acting on behalf of a user:

-

Authorization code

ON -

Implicit (hyprid)

-

Allow ID Token with implicit grant type

ON -

Allow Access Token with implicit grant type

ON

-

-

-

-

Login redirect URIs:

<ontrack url>/login/oauth2/code/okta -

Logout redirect URIs:

<ontrack url>/logout -

Login initiated by:

Either Okta or App -

Application visibility:

-

Display application icon to users

ON

-

-

Login flow:

-

Redirect to app to initiate login (OIDC Compliant)

ON

-

-

Initiate login URI:

<ontrack url>/oauth2/authorization/okta

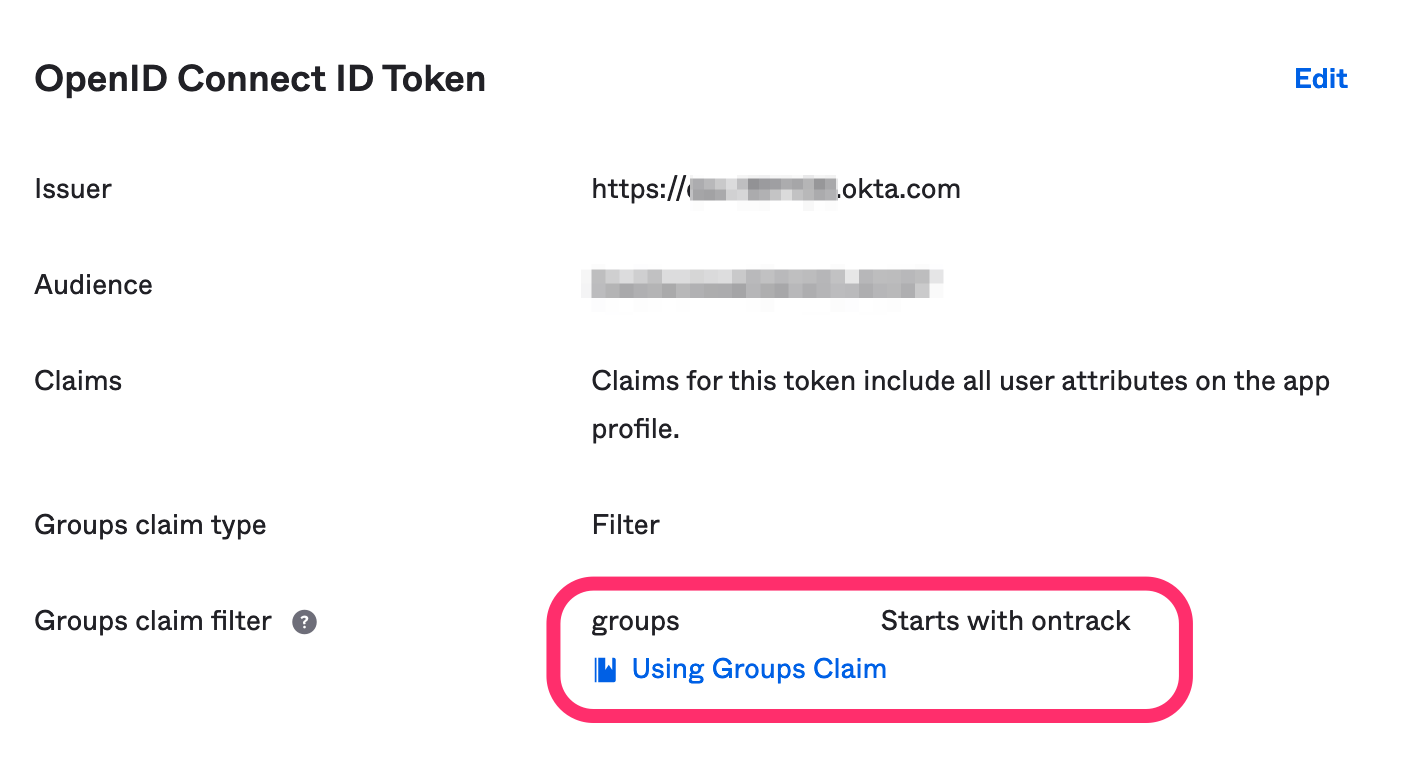

If you want to use Okta groups in the group mappings in Ontrack, go to Sign On section of the application and make sure to select a list of groups (using a filter):

In this example, we select all groups whose name starts with ontrack.

On the Ontrack side, as an administrator:

-

navigate to the OIDC providers menu

-

click on "Create provider"

-

add the following information:

-

ID: unique ID for your provider, typically

okta -

Name: a display name, which will be used on the login page

-

Description: used as a tooltip on the login page

-

Issuer ID:

https://<okta domain>.okta.com/oauth2/default -

Client ID of the application in Okta

-

Client secret of the application in Okta

-

Group filter: regular expression to filter the group list sent by Okta in the

groupsclaim

-

If Ontrack runs behind a SSL termination proxy and if the HTTP headers are not all forwarded, the "Force HTTPS" option can be set to true in order to force the redirect URI to use HTTPS.

|

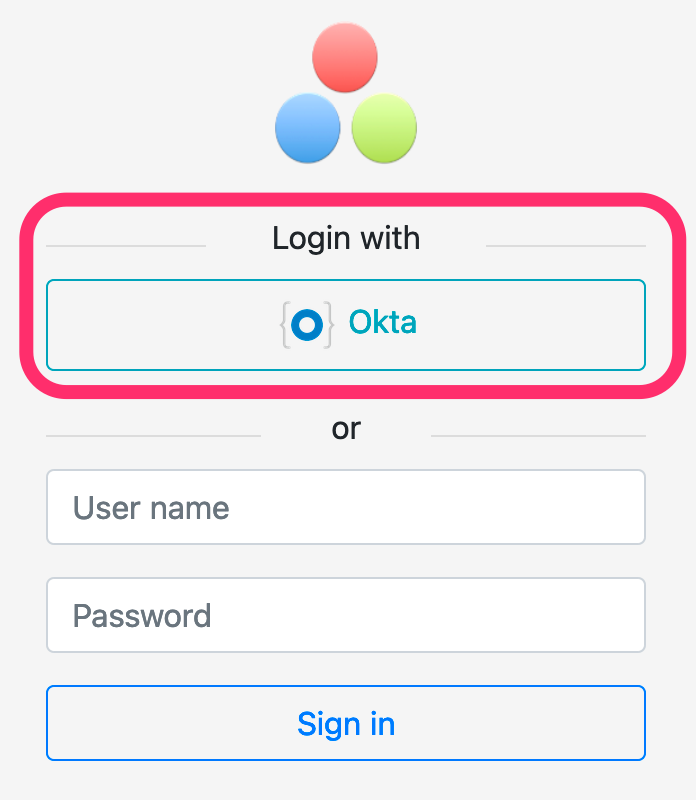

In the OIDC provider list, you can optionally set a picture for this configuration. This picture will be used on the login page. For example:

When the users click on the button, they will be redirected to Okta for authentication.

Upon a first connection, an account will be created automatically on Ontrack, based on the information returned by Okta.

|

Okta is better configured using Configuration as Code. See some examples here. |

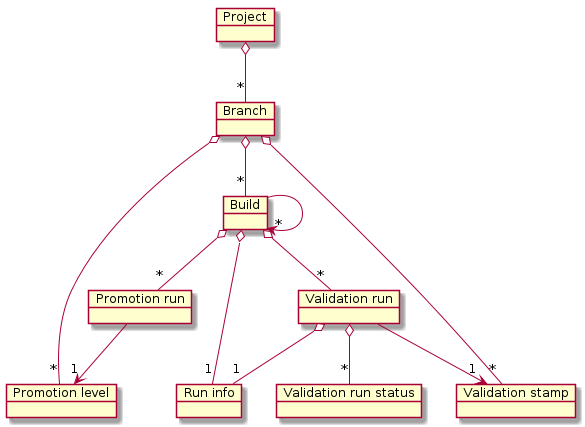

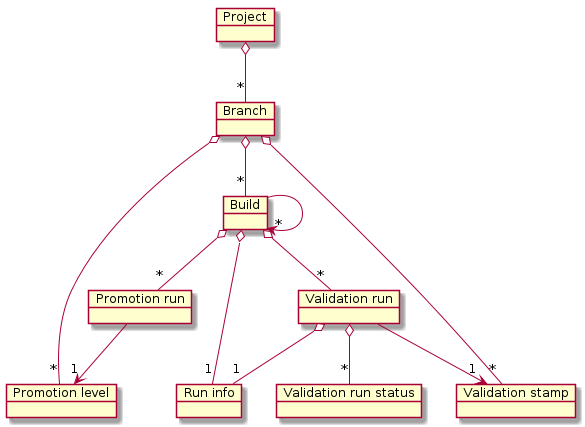

5. Concepts

The root entity in Ontrack is the project.

Several branches can be attached to a project. Builds can be created within a branch and linked to other builds (same or other branches).

Promotion levels and validation stamps are attached to a branch:

-

a promotion level is used to define the promotion a given build has reached. A promotion run defines this association.

-

a validation stamp is used to qualify some tests or other validations on a build. A validation run defines this association. There can be several runs per build and per validation stamp. A run itself has a sequence of statuses attached to it: passed, failed, investigated, etc.

Builds and validation runs can be attached to some "run info" which gives additional information like the duration of the build or the validation.

Branches, promotion levels and validation stamps define the static structure of a project.

6. Security

The Ontrack security is based on accounts and account groups, and on authorizations granted to them.

6.1. Concepts

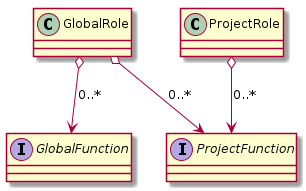

Each action in Ontrack is associated with an authorisation function and those functions are grouped together in roles which are granted to accounts and account groups.

An account can belong to several account groups and his set of final authorisation functions will be the aggregation of the rights given to the account and to the groups.

See Accounts management to manage accounts and groups.

6.1.1. Roles

| As of now, only roles can be assigned to groups and accounts, and the list of roles and their associated functions is defined by Ontrack itself. |

Ontrack distinguishes between global roles and project roles.

Extensions can contribute to built-in roles and functions - see Extending the security for details.

6.1.2. Global roles

An ADMINISTRATOR has access to all the functions of Ontrack, in all projects. At least such a role should be defined.

By default, right after installation, a default admin account is

created with the ADMINISTRATOR role, having admin as password. This

password should be changed as soon as possible.

|

A CREATOR can create any project and can, on all projects, configure them, create branches, create promotion levels and validation stamps. This role should be attributed to service users in charge of automating the definition of projects and branches.

An AUTOMATION user can do the same things than a CREATOR but can, on all projects, additionally edit promotion levels and validation stamps, create builds, promote and validate them, manage account groups and project permissions. This role is suited for build and integration automation (CI).

A CONTROLLER can, on all projects, create builds, promote and validate them. It is suited for a basic CI need when the Ontrack structure already exists and does not need to be created.

A GLOBAL VALIDATION MANAGER can manage validation stamps across all projects.

A PARTICIPANT can view all projects, and can add comments to all validation runs.

A READ_ONLY can view all projects, but cannot perform any action on them.

The global roles can only be assigned by an administrator, in the Account management page, by going to the Global permissions command.

A global permission is created by associating:

-

a permission target (an account or a group)

-

a global role

Creation:

-

type the first letter of the account or the group you want to add a permission for

-

select the account or the group

-

select the role you want to give

-

click on Submit

Global permissions are created or deleted, not updated.

6.1.3. Project roles

A project OWNER can perform all operations on a project but to delete it.

A project PARTICIPANT has the right to see a project and to add comments in the validation runs (comment + status change).

A project VALIDATION_MANAGER can manage the validation stamps and create/edit the validation runs.

A project PROMOTER can create and delete promotion runs, can change the validation runs statuses.

A project PROJECT_MANAGER cumulates the functions of a PROMOTER and of a VALIDATION_MANAGER. He can additionally manage branches (creation / edition / deletion) and the common build filters. He can also assign labels to the project.

A project READ_ONLY user can view this project, but cannot perform any action on it.

Only project owners, automation users and administrators can grant rights in a project.

In the project page, select the Permissions command.

A project permission is created by associating:

-

a permission target (an account or a group)

-

a project role

Creation:

-

type the first letter of the account or the group you want to add a permission for

-

select the account or the group

-

select the role you want to give

-

click on Submit

Project permissions are created or deleted, not updated.

6.1.4. Accounts

Accounts are created:

-

by an administrator in the built-in authentication system, with a password stored and encrypted in Ontrack itself

-

upon login when using external authentication systems like a LDAP or Open ID provider.

6.1.5. Account groups

An administrator can create groups using a name and a description, and assign them a list of global or project roles.

An account can be assigned to several groups.

| If an external authentication system, like a LDAP or Open ID provider, is enabled, the external groups can be mapped to the account groups. |

6.2. General settings

By default, all authenticated users have access to all the projects, in read only mode.

You can disable this global access by going to the Settings and click the Edit button in the General section. There you can set the Grants project view to all option to No.

6.3. Extending the security

Extensions can extend the security model beyond what if defined in the Ontrack core. See Extending the security for more details.

7. Feeding information in Ontrack

Ontrack gathers and structures information which is sent by other tools in a CI/CD ecosystem or collected from them.

Foremost among the tools which will feed information into Ontrack are the CI engines. They can initialize projects and branches, they can create builds, validations and promotions, they can inject meta-information like timings, test results or links between builds.

| Ontrack gathers also information out of ticketing systems, artifact managers or source control systems. This aspect is covered in the Integrations chapter. |

Ontrack provides an API for tools to inject data, but more specialized integrations are provided as well:

-

the Ontrack CLI

-

a set of Ontrack GitHub actions

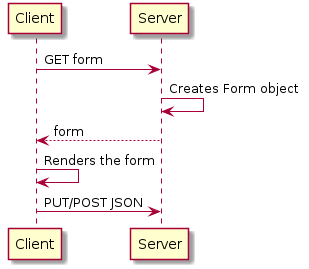

7.1. Using the API

Ontrack provides a GraphQL API to interact with it:

-

queries to get information from Ontrack

-

mutations to inject information from Ontrack

Example: to create a new build for an existing project & branch:

mutation {

createBuild(input: {

projectName: "my-project",

branchName: "my-branch",

name: "1234",

runInfo: {

runTime: 12

}

}) {

build {

id

}

errors {

message

}

}

}See Ontrack GraphQL API for a complete information.

7.2. Ontrack CLI

Instead of using the API directly, you can use the Ontrack CLI, a multi-platform client which wraps the API calls into convenient commands.

For example, to create a new build for an existing project & branch:

ontrack-cli build setup \

--project my-project \

--branch my-branch \

--build 1234See the Ontrack CLI documentation for more information about the installation, configuration & usage of this client.

7.3. Jenkins plug-in

If you’re using Jenkins as a CI engine, you can either use the Ontrack Jenkins plug-in or the Ontrack Jenkins pipeline library.

7.3.1. Jenkins plug-in

The Ontrack Jenkins plug-in relies on API to inject data into Ontrack.

For example, to create a build:

pipeline {

stages {

stage('Build') {

// ...

// Computes the `version` variable

// ...

post {

success {

ontrackBuild(

project: 'my-project',

branch: 'my-branch',

build: version,

)

}

}

}

}

}|

The Ontrack Jenkins plug-in will be deprecated at some point, in favor of using the Ontrack Jenkins pipeline library described below. |

7.3.2. Jenkins pipeline library

The Ontrack Jenkins pipeline library wraps the Ontrack CLI into convenient pipeline steps.

|

To be implemented. As much as possible, the pipeline library will mimic the steps which were provided by the Jenkins plug-in. |

For example, to create a build:

pipeline {

stages {

stage('Build') {

// ...

// Computes the `version` variable

// ...

post {

success {

ontrackBuild(

project: 'my-project',

branch: 'my-branch',

build: version,

)

}

}

}

}

}7.4. GitHub

There are several ways to integrate GitHub Actions workflows with Ontrack:

-

ingestion of workflow data in Ontrack through a GitHub Webhook

-

direct integration using GitHub Actions or the Ontrack CLI

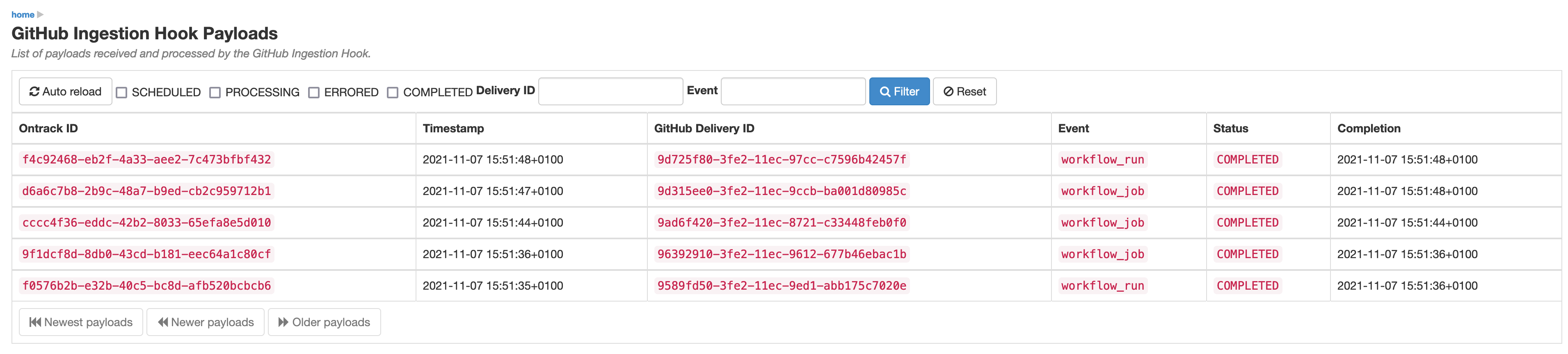

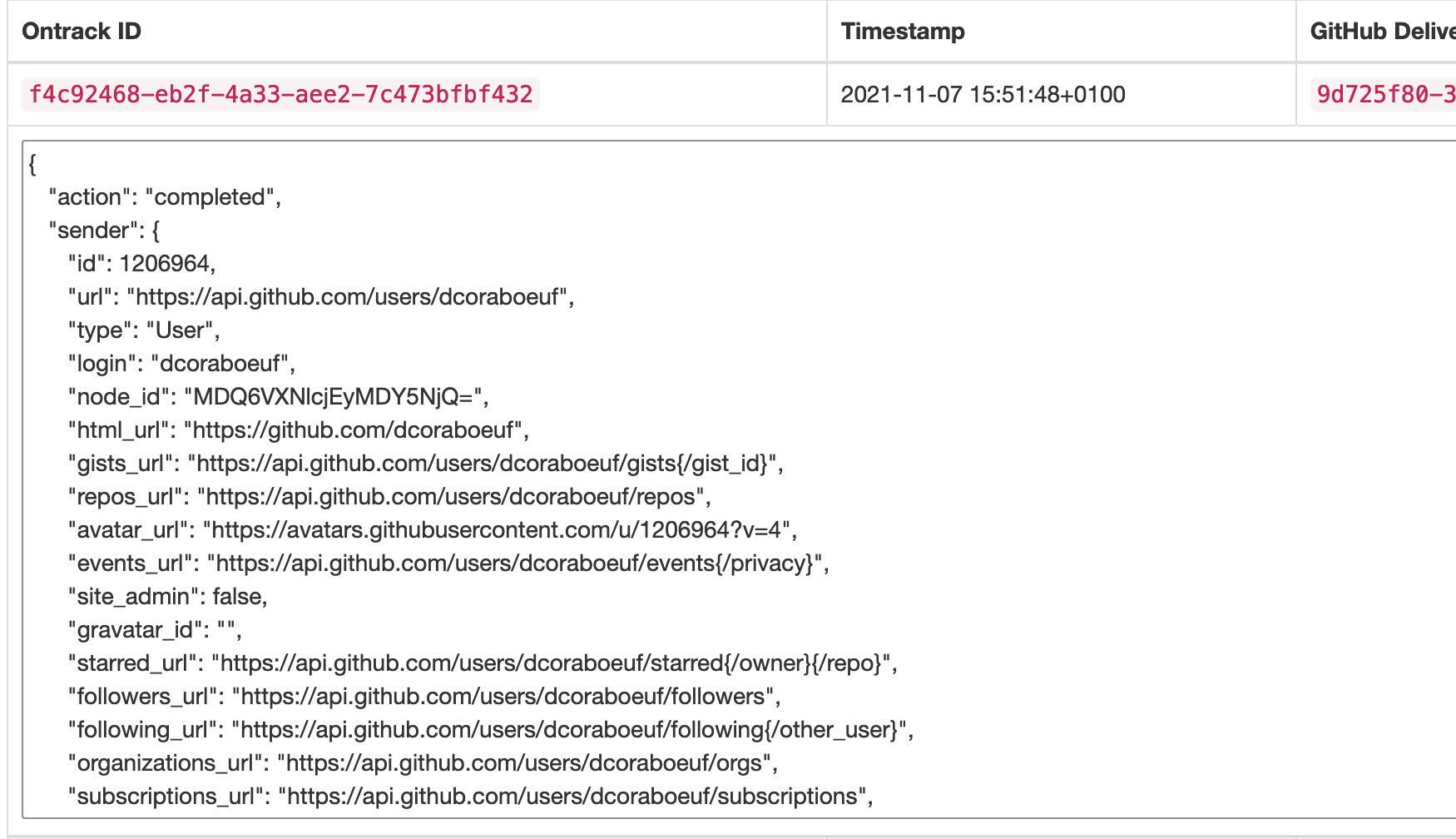

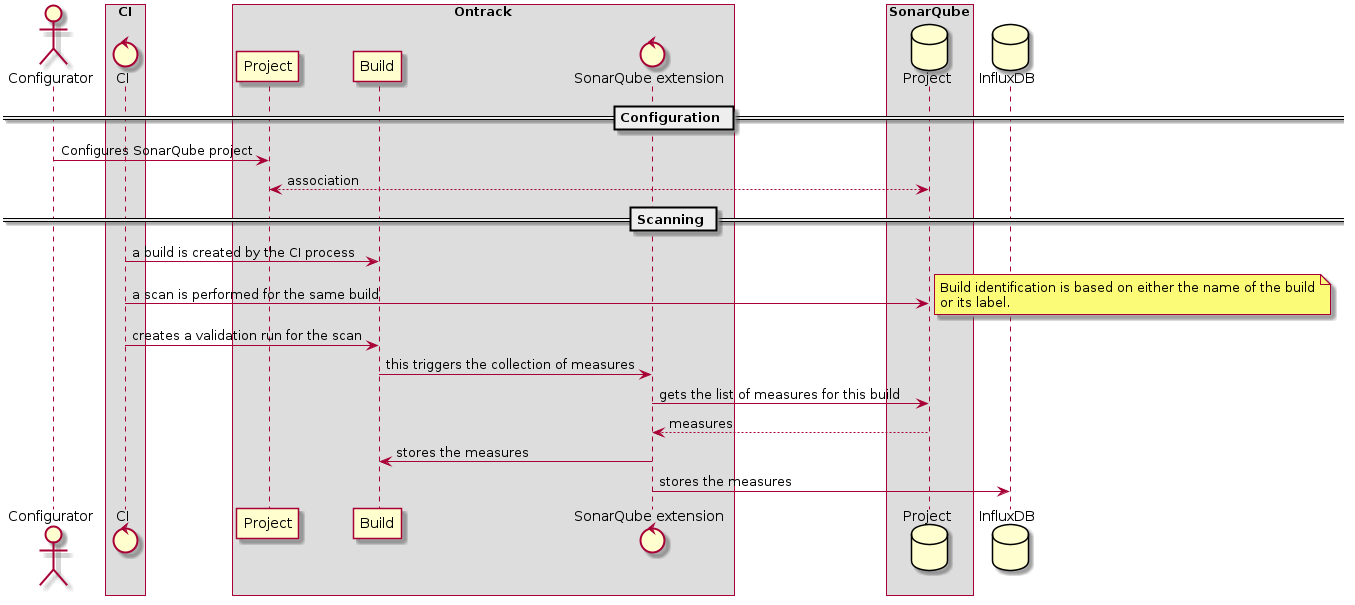

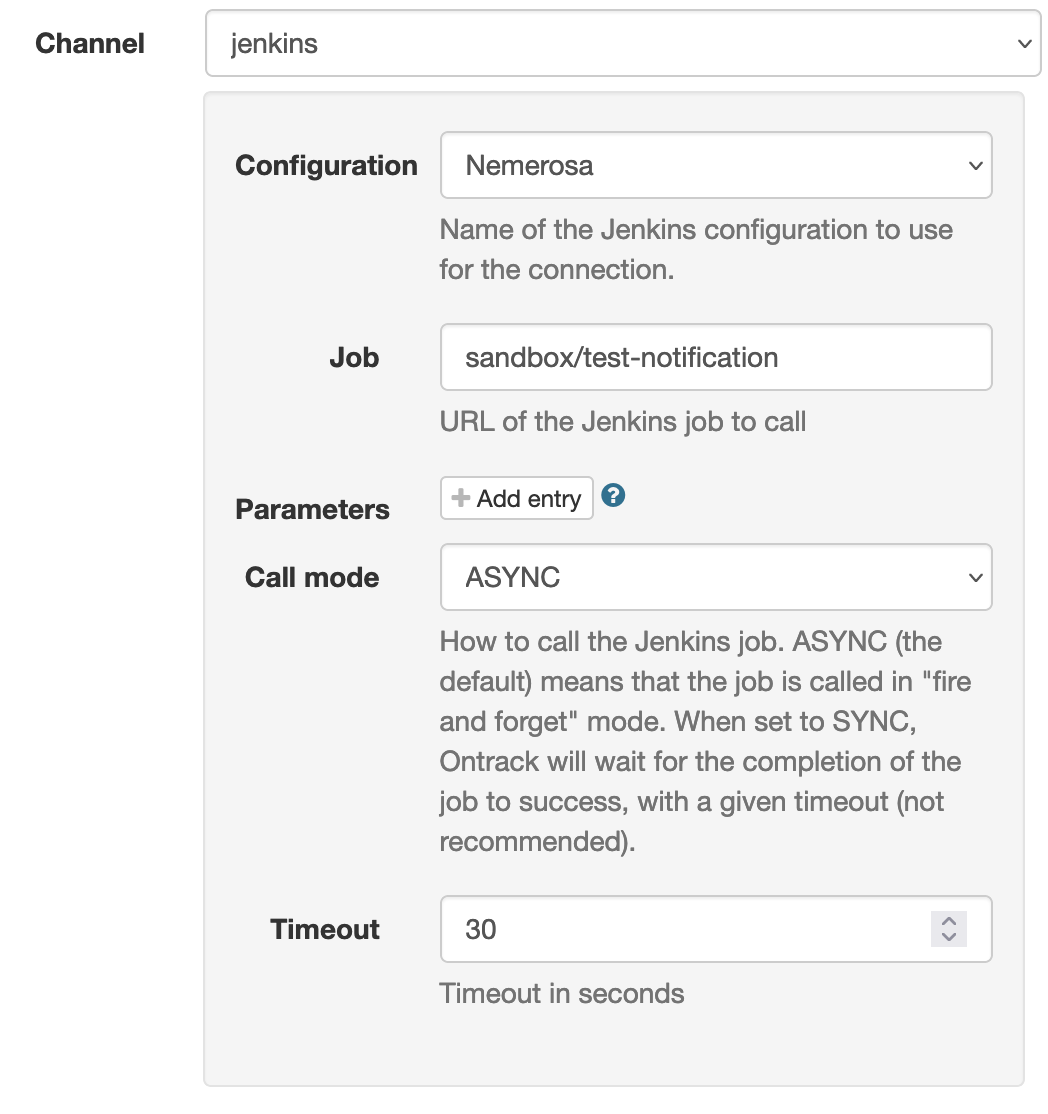

7.4.1. GitHub Ingestion Hook

Integration of Ontrack inside of GitHub workflows is cumbersome and does not feel very natural.

A more seamless way to get GitHub workflows data into Ontrack is to work by ingesting the data directly from the workflow, without even adapting it.

We can do this by registering a webhook at the repository or organization level.

See GitHub Ingestion for the detailed configuration of the hook and all its options.

As a quick start:

-

Generate a unique token randomly (GitHub suggests using

ruby -rsecurerandom -e 'puts SecureRandom.hex(20)'but any other method would do) -

In the repository or organization, register a Webhook:

-

URL -

<ontrack>/hook/secured/github/ingestion -

Content type -

application/json -

Secret - the secret you generated in step (1)

-

Permissions:

-

Workflow jobs

-

Workflow runs

-

Pushes (for autoconfiguration)

-

-

In Ontrack, create at least one GitHub configuration

-

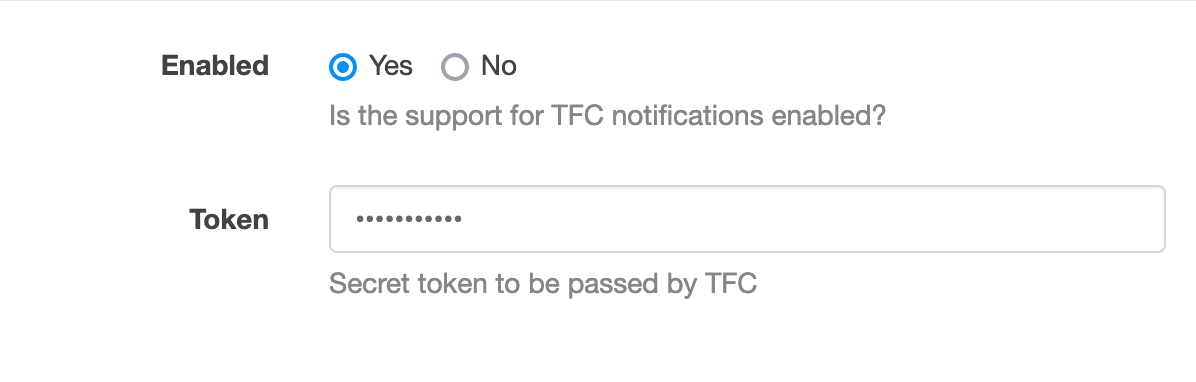

Still in Ontrack, go to the Settings > GitHub workflow ingestion section and set the token as generated in step (1)

From now on, everytime a working runs in GitHub, data about its steps will be created automatically in Ontrack.

7.4.2. Ontrack CLI & GitHub Actions

You can easily use the Ontrack CLI from your GitHub workflows by using the following actions:

-

nemerosa/ontrack-github-actions-cli-setup- install, configures and use the CLI to setup a project and branch in Ontrack based on GitHub information:

- name: Setup the CLI

uses: nemerosa/ontrack-github-actions-cli-setup@v2

with:

github-token: ${{ github.token }}

only-for: nemerosa

url: <ontrack-url>

token: ${{ secrets.ONTRACK_TOKEN }}

config: github.com

indexation: 120Creating a build

nemerosa/ontrack-github-actions-cli-build - creates a build based on GitHub information:

- name: Ontrack build

uses: nemerosa/ontrack-github-actions-cli-build@v1and if you want to add some release information:

- name: Ontrack build

uses: nemerosa/ontrack-github-actions-cli-build@v1

with:

release: ${{ env.VERSION }}This steps:

-

creates or updates the build

-

sets the commit property

-

sets the release property if provided

-

sets some run info

-

sets a link to the GH workflow

By default, the build name will be the workflow run number, you can change this by using the build input:

- name: Ontrack build

uses: nemerosa/ontrack-github-actions-cli-build@v1

with:

build: <your build name>Validating a build

-

nemerosa/ontrack-github-actions-cli-validation- creates a validation run for a build based on GitHub information:

- name: Ontrack build validation

uses: nemerosa/ontrack-github-actions-cli-validation@main

with:

step-name: Ontrack build

validation: BUILD

build: ${{ github.run_number }}

token: ${{ github.token }}Note that when nemerosa/ontrack-github-actions-cli-setup has been called into your workflow job, the Ontrack CLI becomes available in all subsequent steps and be used directly:

- name: Setup the CLI

uses: nemerosa/ontrack-github-actions-cli-setup@v1

with:

# ...

- name: Using the CLI

run: ontrack-cli ...Example of GitHub pipeline with actions

In your repository or organization, start by declaring the following elements:

-

a

ONTRACK_URLvariable, having the URL of your Ontrack installation -

a

ONTRACK_TOKENsecret, having an API token defined in Ontrack; the account linked to this token must have the necessary rights (typically administrator or automation)

In Ontrack itself, make sure to define at least one GitHub configuration.

In each job, start by setting up the Ontrack CLI:

- name: Setup the CLI

uses: nemerosa/ontrack-github-actions-cli-setup@v2

with:

github-token: ${{ github.token }}

url: ${{ vars.ONTRACK_URL }}

token: ${{ secrets.ONTRACK_TOKEN }}

config: github.com

indexation: 60| More options are available at https://github.com/nemerosa/ontrack-github-actions-cli-setup |

Once this step has been run, the Ontrack CLI is available in the rest of the workflow.

Typically, you’ll create a build in Ontrack:

- name: Ontrack build

run: |

ontrack-cli build setup \

--project ${{ steps.ontrack.outputs.project }} \

--branch ${{ steps.ontrack.outputs.branch }} \

--build ${{ github.run_number }}

ontrack-cli build set-property \

--project ${{ steps.ontrack.outputs.project }} \

--branch ${{ steps.ontrack.outputs.branch }} \

--build ${{ github.run_number }} \

git-commit \

--commit ${{ github.sha }}After the build is created, you can create some validations,

either using the Ontrack CLI

or using the dedicated ontrack-github-actions-cli-validation action.

For example:

- uses: nemerosa/ontrack-github-actions-cli-validation@v2

if: ${{ always() }}

the if: ${{ always() }} is there to run this step even if the previous step is failed.

|

Using the CLI, it can look like:

- name: Validation

run: |

ontrack-cli validate \

--project ${{ steps.ontrack.outputs.project }} \

--branch ${{ steps.ontrack.outputs.branch }} \

--build ${{ github.run_number }} \

--validation my-validation \

--status PASSEDIf you want to record some JUnit XML test results:

- name: Validation

if: ${{ always() }}

run: |

ontrack-cli validate \

--project ${{ steps.ontrack.outputs.project }} \

--branch ${{ steps.ontrack.outputs.branch }} \

--build ${{ github.run_number }} \

--validation my-validation \

junit \

--pattern "**/results/*.xml"Setting up validations and promotions

The ontrack-github-actions-cli-setup action allows the setup of validations and promotions:

- name: Setup the CLI

uses: nemerosa/ontrack-github-actions-cli-setup@v2

with:

# ...

promotions: .github/ontrack/promotions.yamlwhere .github/ontrack/promotions.yaml looks like:

BRONZE:

validations:

- BUILD

SILVER:

validations:

- ACCEPTANCE

promotions:

- BRONZEThis setup action does not allow much customization yet, and one needs to rely on the Ontrack CLI for more options (typing of the validations, auto-promotion, etc).

8. Features

8.2. Managing branches

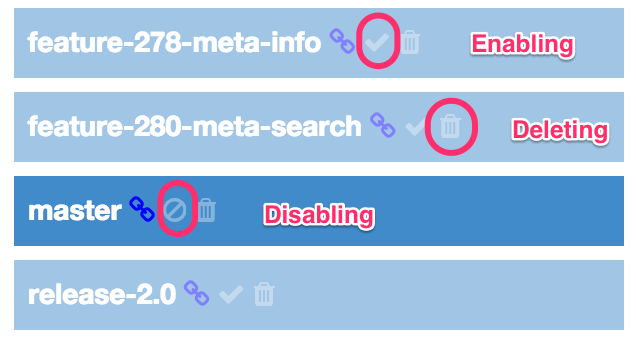

8.2.1. Managing the branches in the project page

If you click on the Show all branches button in the project page, you can display all the branches, including the ones being disabled.

According to your authorizations, the following commands will be displayed as icons just on the right of the branch name, following any other decoration:

-

disabling the branch

-

enabling the branch

-

deleting the branch

This allows you to have quick access to the management of the branches in a project. Only the deletion of a branch will prompt you about your decision.

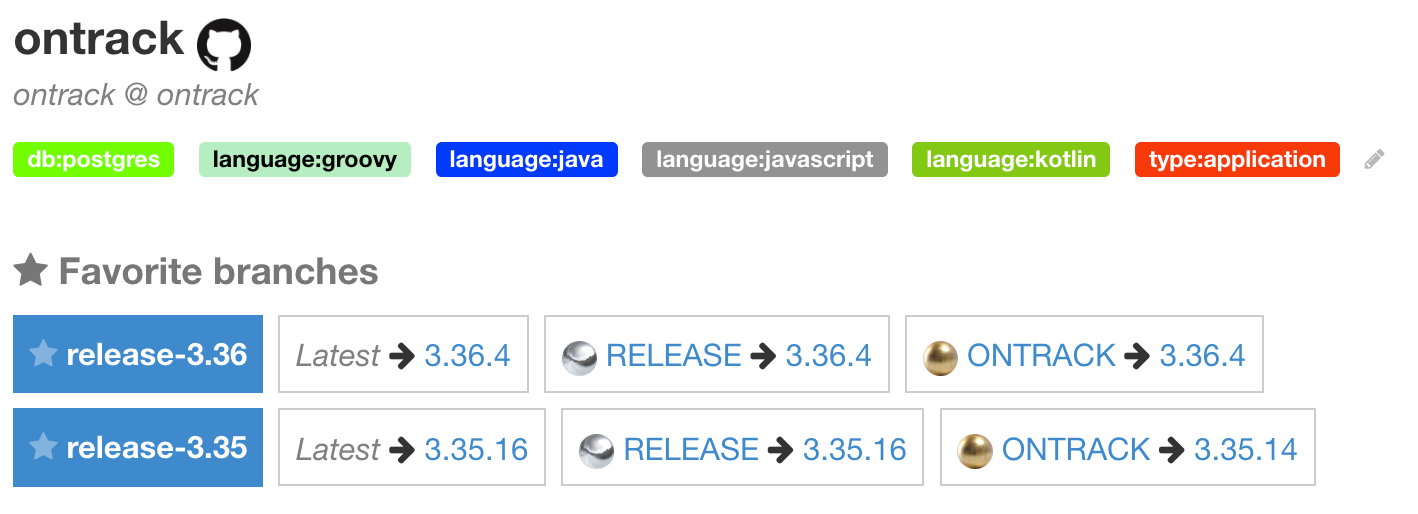

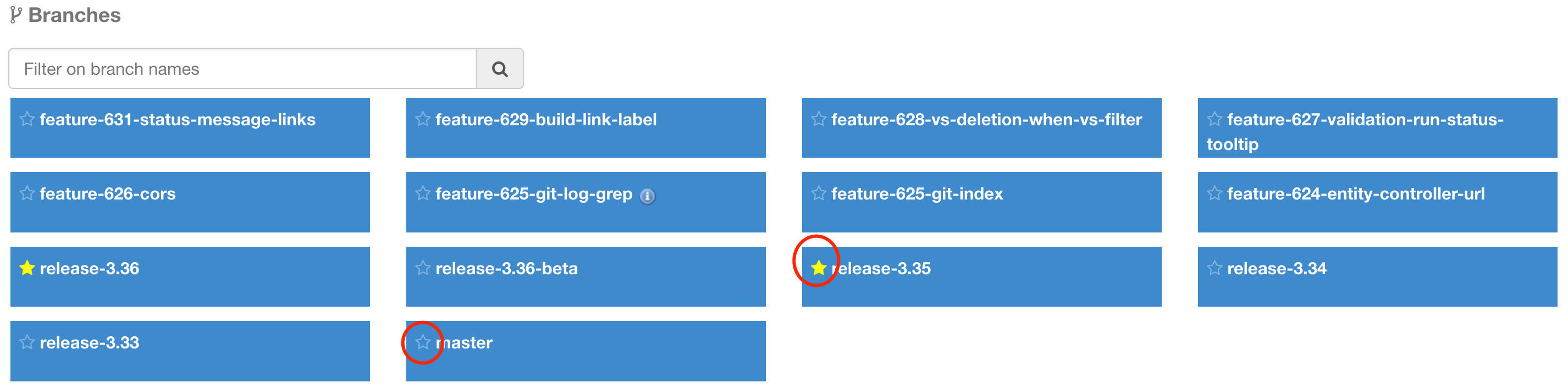

8.2.2. Branch favorites

Instead of selectioning a project as a favorite, one might find more convenient to select branches only.

This reduces the clutter on the home page when projects tend to have a lot of branches.

All favorite branches do appear on the home page, together with any favorite project:

The favorite branches of a given project do also appear on the project page:

In both cases, following information is displayed:

-

latest build

-

latest build per promotion

|

Branches can be unselected as favorite using the star left of their name. |

In order to select a branch as favorite, use the little star left of its name in the branch list in the project page:

|

You can use this star to unselect it as well. When selected, the star is marked as yellow. |

8.2.3. Pull requests

When Git is enabled for a project, the Ontrack branches can point to either regular Git branches or to pull requests when this feature is enabled.

See [git-pull-requests] for more information.

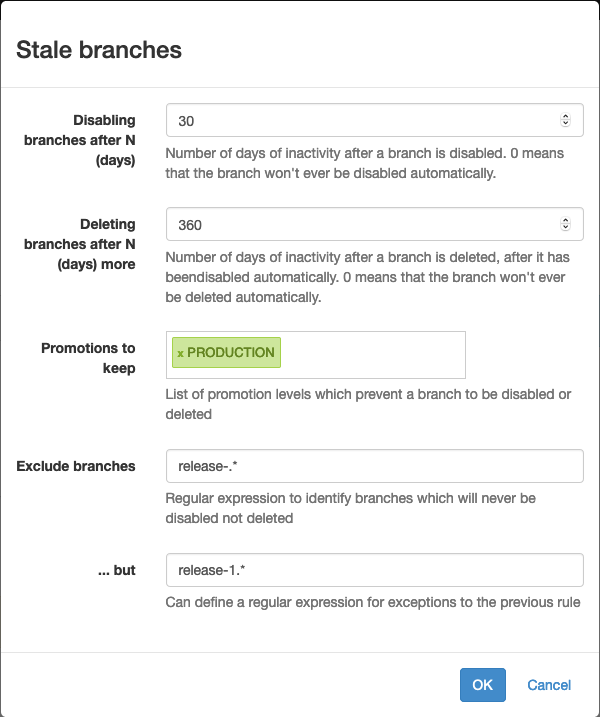

8.2.4. Managing stale branches

By default, Ontrack will keep all the branches of a project forever. This can lead to a big number of branches to be displayed.

You can configure a project to disable branches after a given number of days has elapsed since the last build, and then to delete them after an additional number of days has elapsed again.

To configure this:

-

go to the project page

-

select the Stale branches property and add it:

-

set the number of days before disabling and the number of days before deleting

If the disabling days are set to 0, no branch will be ever disabled or deleted.

If the deleting days are set to 0, no branch will ever be deleted.

You can also set a list of promotion levels - a branch which is or has been promoted to such a promotion level will not be eligible for being disabled or deleted.

In the sample above, the stale branches will be disabled after 60 days (not

shown any longer by default), and after again 360 days, they will be deleted

(so after 390 days in total). Branches which have at least one build being promoted to PRODUCTION will not

be deleted or disabled.

Additional, two regular expressions can be used to add further protection against the disabling & deletion of the branches:

-

the first one ("includes") is used to select the branches which are not eligible to disabling and deletion

-

the second expression ("excludes") can be used to refine the first expression, by matching branches which still must be disabled or deleted.

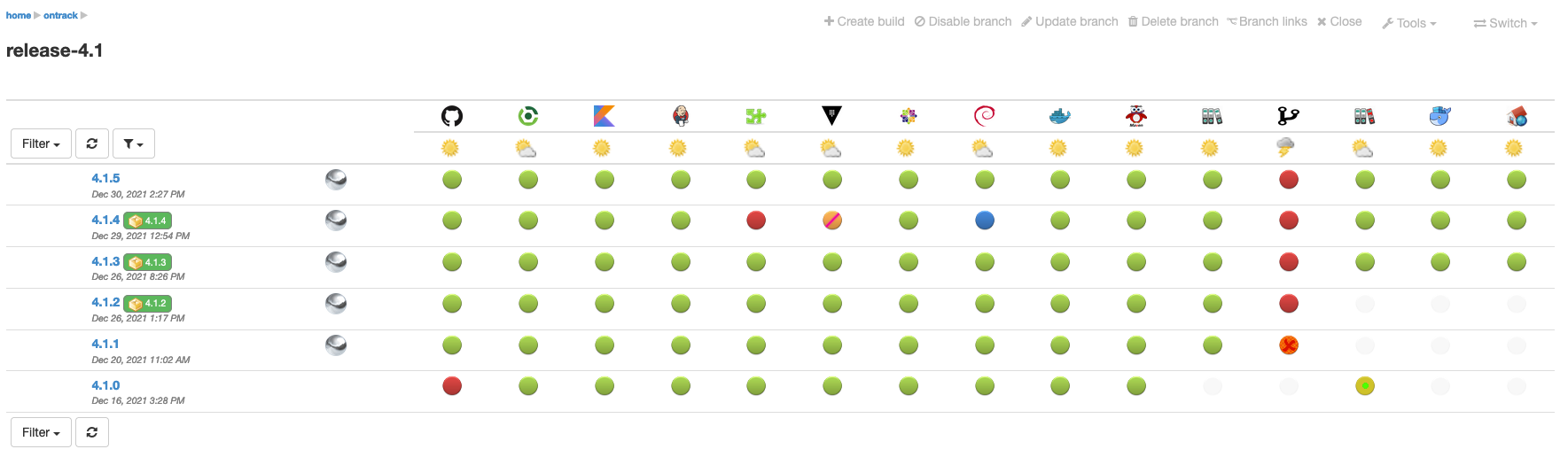

8.2.5. Validation stamp filters

When a branch defines many validation stamps, the view can become cluttered and not really useful any longer, because displaying too much information.

Validation stamp filters can be defined to restrict the view to a set of known validation stamps.

Using filters

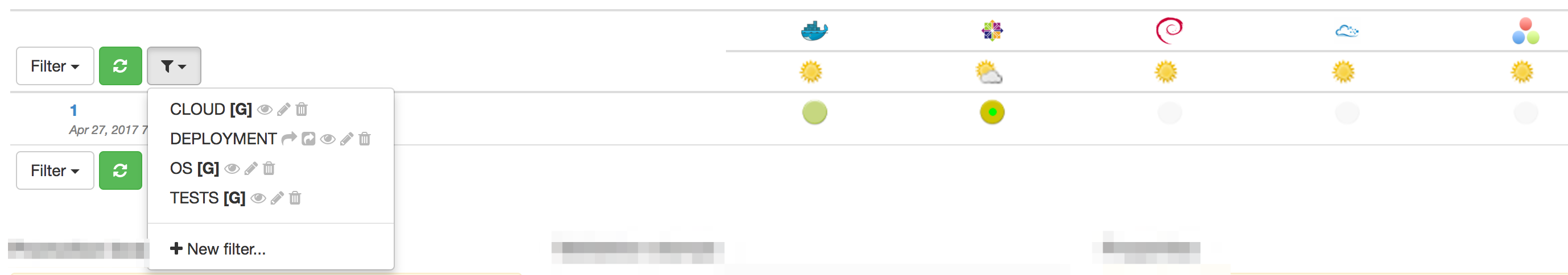

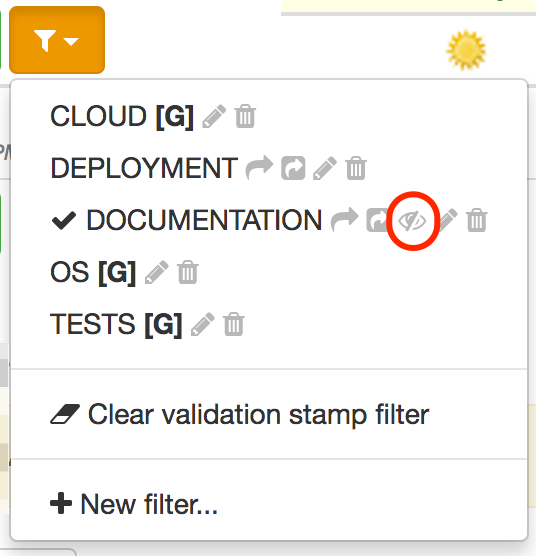

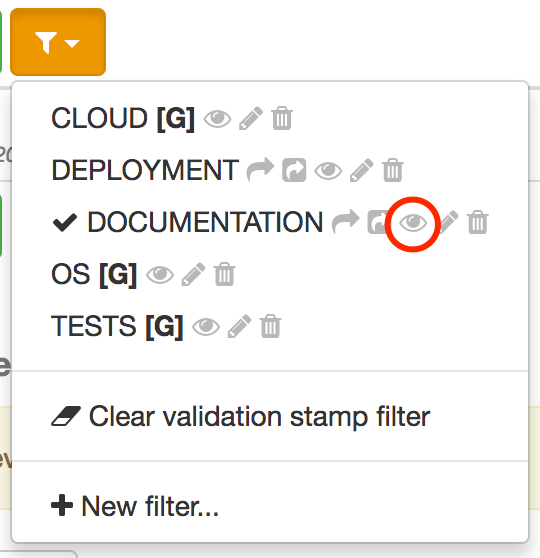

Validation stamp filters can be selected in the branch view, just on the left of the list of validation stamp headers:

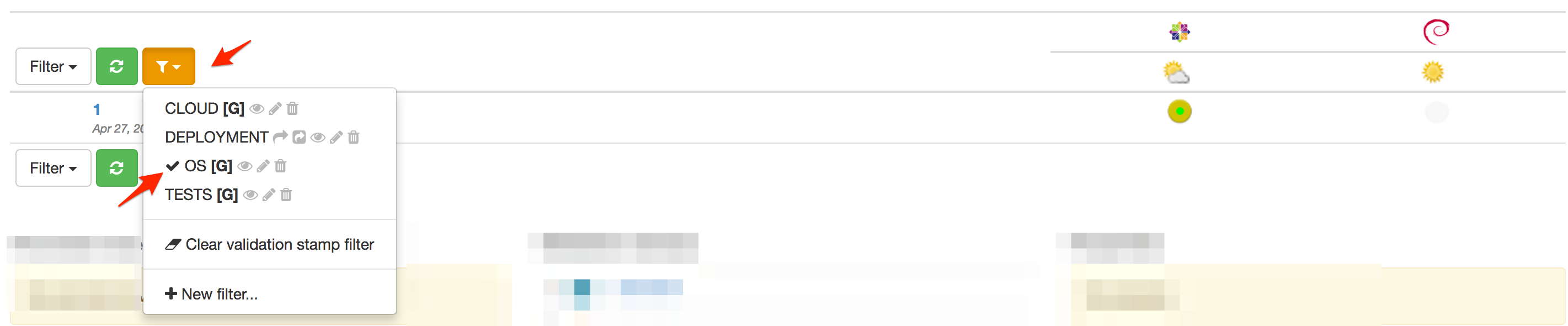

When a filter is selected, it is marked as such and only associated validation stamp columns are shown in the view:

The validation stamp filter menu is also marked in orange to indicate that a filter has been applied.

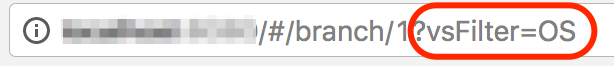

When the filter is applied, its name appears also in the URL. This can be used as a permalink:

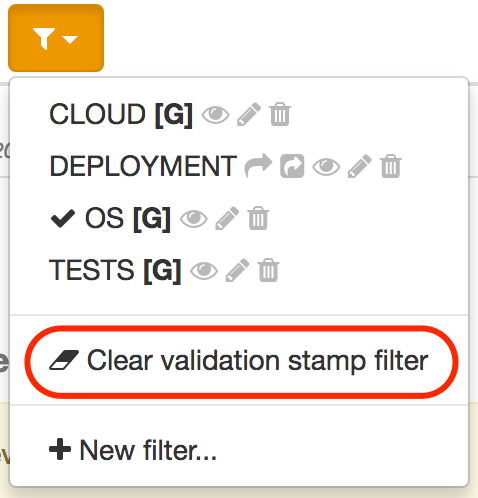

You can remove the filter by selecting Clear validation stamp filter in the filter menu:

Editing filters

|

Only authorized users are allowed to edit the validation stamp filters for a branch. See Authorisations for more details. |

A validation stamp filter is defined by:

-

a name

-

a list of validation stamp names to include

While it is possible to edit a filter using a dialog (see later), it is far easier to use the in-place editor.

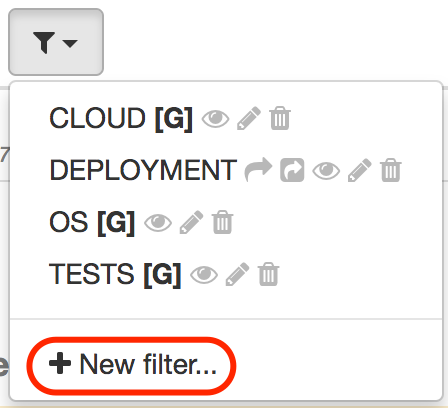

Start by creating a validation stamp filter by selecting the New Filter… entry in the filter menu:

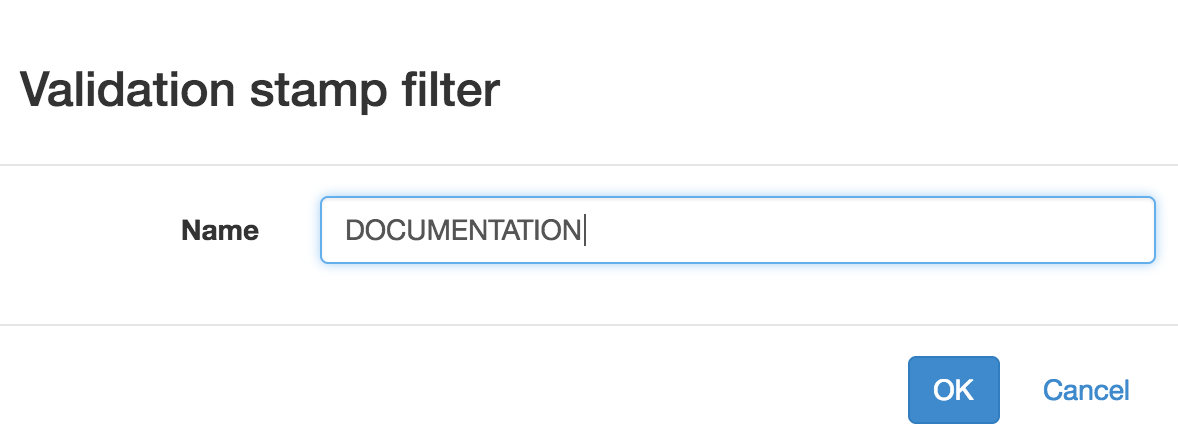

This displays a dialog to create the new filter:

| Only the name is required and all current validation stamps filters are included by default. |

When created, the filter can be directly edited in-place:

The following actions are possible:

-

by clicking on the Select none button, no validation stamps is associated with the filter.

-

by clicking on the Select all button, all validation stamps are associated with the filter.

-

by clicking on the Done with edition button, the in-place edition stops and the normal display is resumed

You can also click on a validation stamp to remove it or to add it to the filter.

In case the validation stamp is associated with the filter, a minus icon appears close to its name. It it is not associated, the icon is dimmed and a plus icon appears:

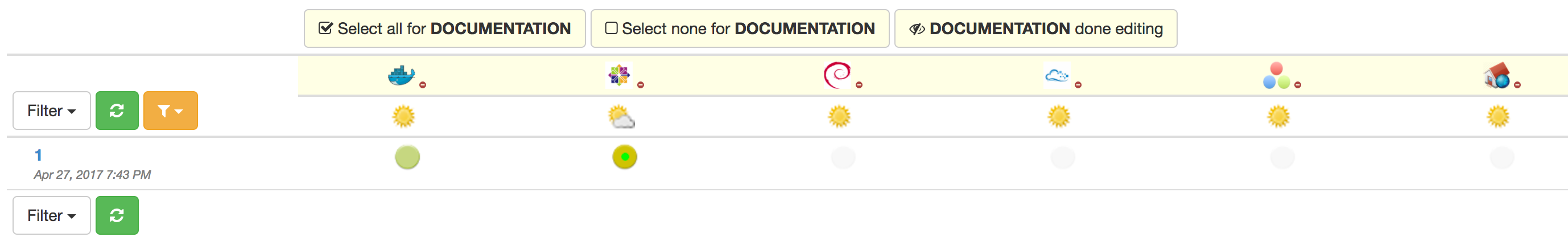

Note that you can also stop the edition by selecting the eye icon in the menu:

To start editing an existing filter, just click also on the eye icon close to its name:

| Select any other filter, or removing the filter, will also stop the in-place edition. |

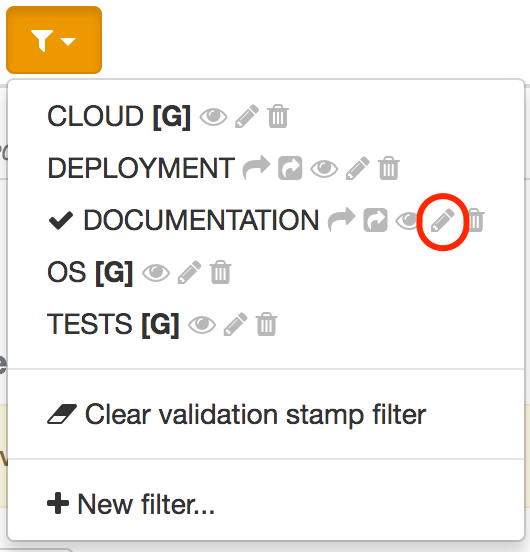

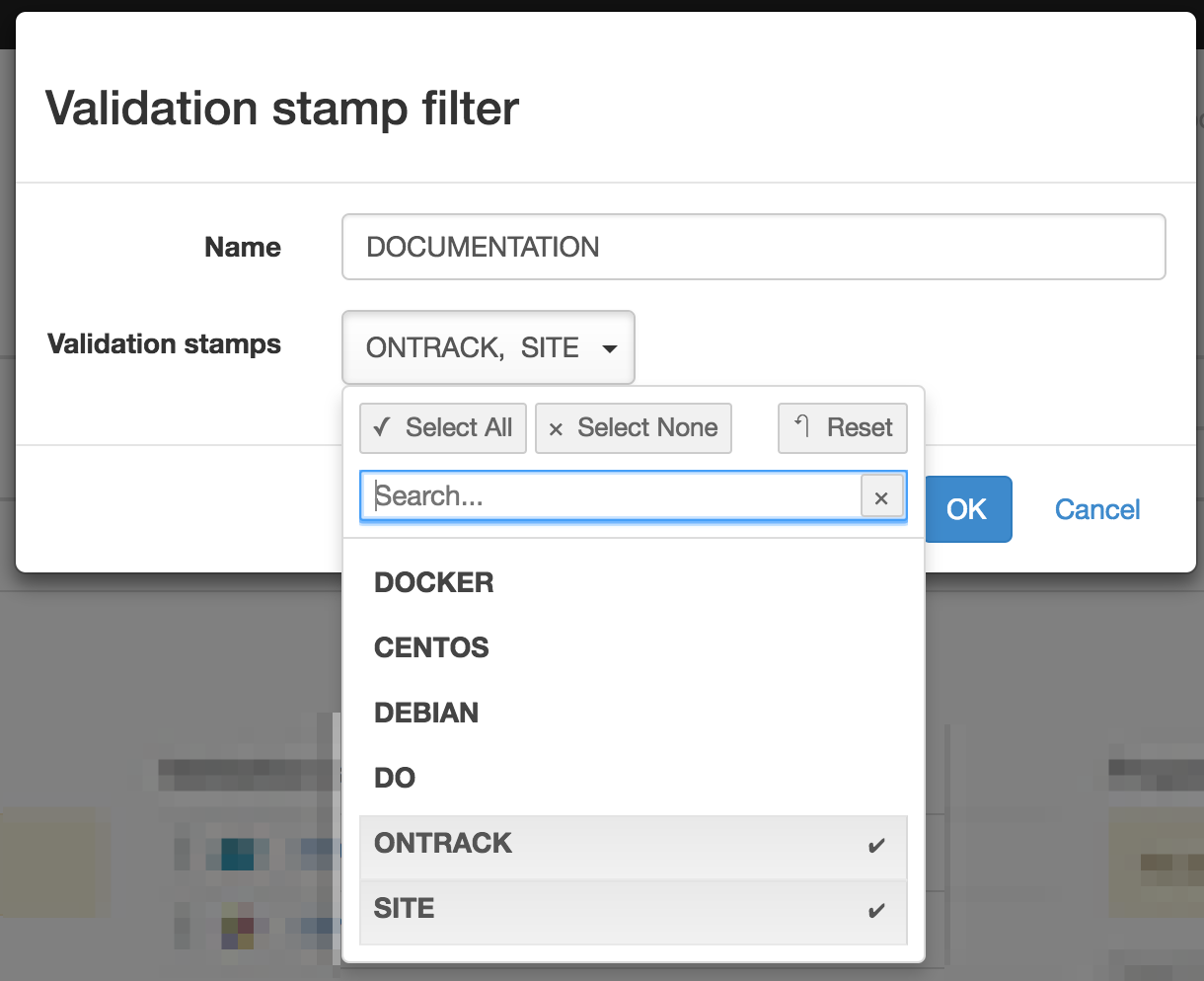

To edit a filter directly, you can also select the pencil icon and edit the filter using a dialog:

This displays an edition dialog allowing to change the name and the list of validation stamps.

|

For a filter associated with a branch (see below, sharing), names can be selected among the validation stamps of the branch. For a filter associated with a project, the list of validation stamps for all the branches is available. For a global filter, names are no longer selected but must be edited. |

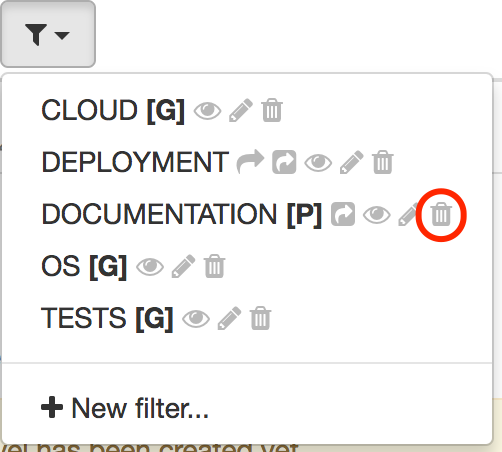

Finally, to delete a filter, click on the trash icon:

| A confirmation will be asked before the deletion actually occurs. |

Sharing

A filter is created by default at branch level and is only visible when the associated branch is displayed.

An authorized user can:

-

share the filter at project level - in this case, the filter is available for all the branches of the project

-

share the filter at global level - in this case, the filter is available for all projects and all branches

A filter shared at project level is shown with a [P] close to its name

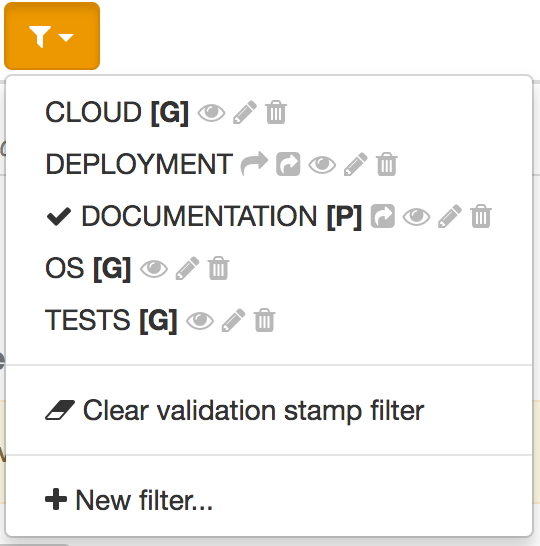

and a global filter with a [G]:

In the screenshot above:

-

DEPLOYMENTis associated with the current branch -

DOCUMENTATIONis associated with the project -

the other filters are global

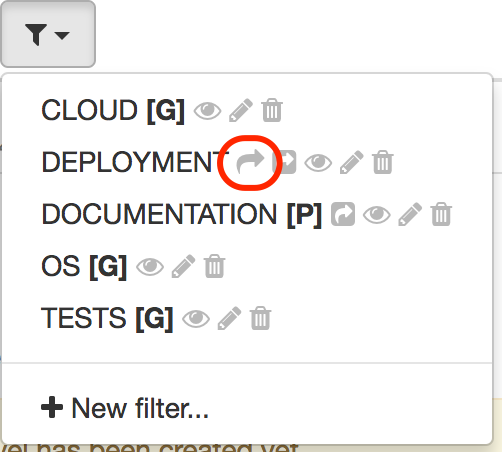

To share a filter at project level, click on the share icon:

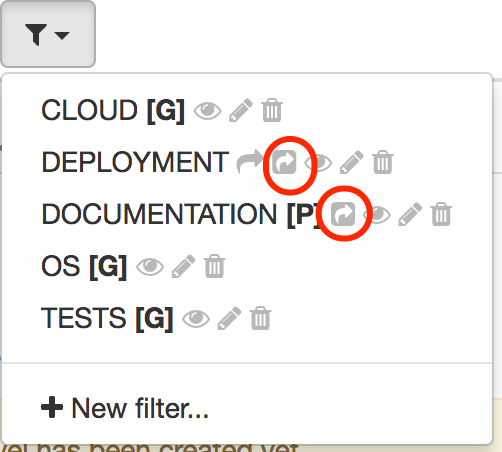

To share a filter at global level, click on the share icon:

Authorisations

According to the role of the authenticated used, following actions are possible:

| Scope | Action | Participant | Validation stamp manager | Project manager/owner | Administrator |

|---|---|---|---|---|---|

Branch |

Create |

Yes |

Yes |

Yes |

Yes |

Branch |

Edit |

Yes |

Yes |

Yes |

Yes |

Branch |

Delete |

Yes |

Yes |

Yes |

Yes |

Branch |

Share to project |

No |

Yes |

Yes |

Yes |

Project |

Edit |

No |

Yes |

Yes |

Yes |

Project |

Delete |

No |

Yes |

Yes |

Yes |

Project |

Share to global |

No |

No |

No |

Yes |

Global |

Edit |

No |

No |

No |

Yes |

Global |

Delete |

No |

No |

No |

Yes |

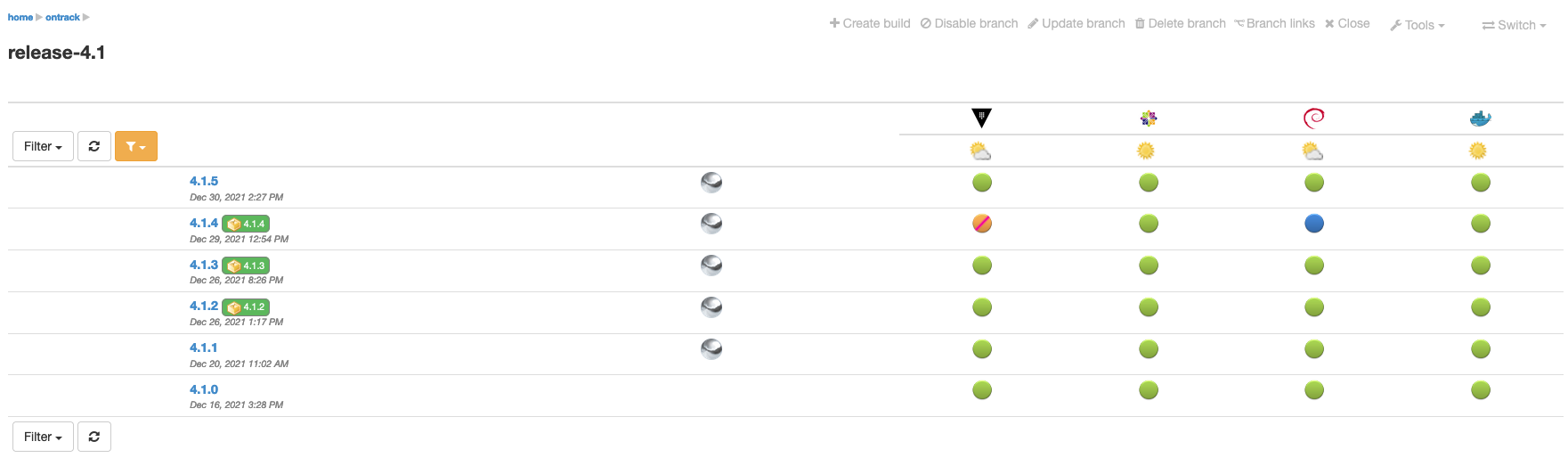

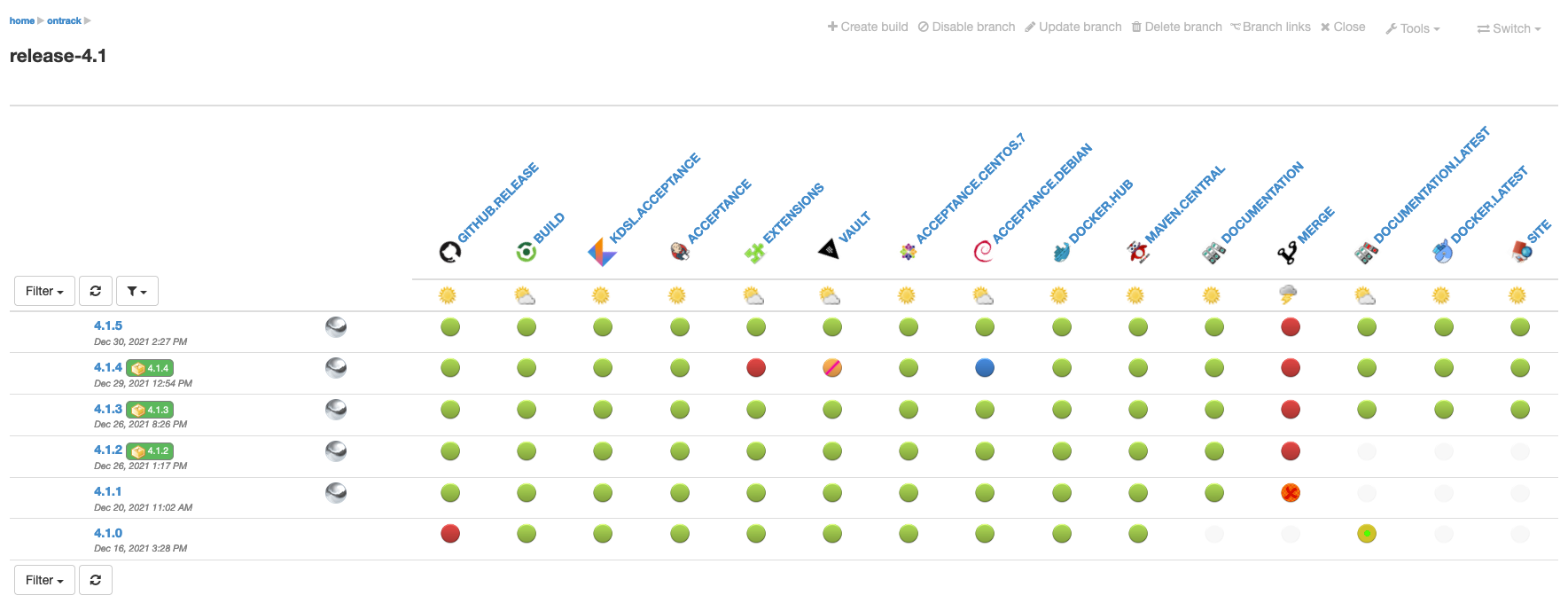

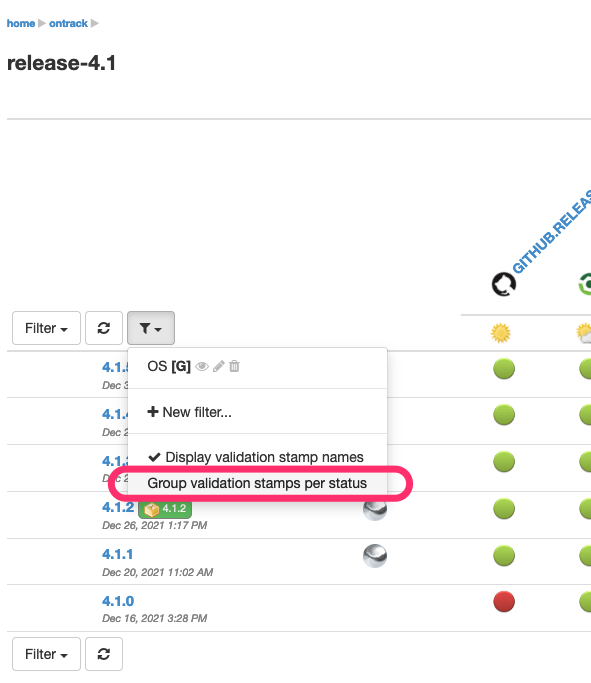

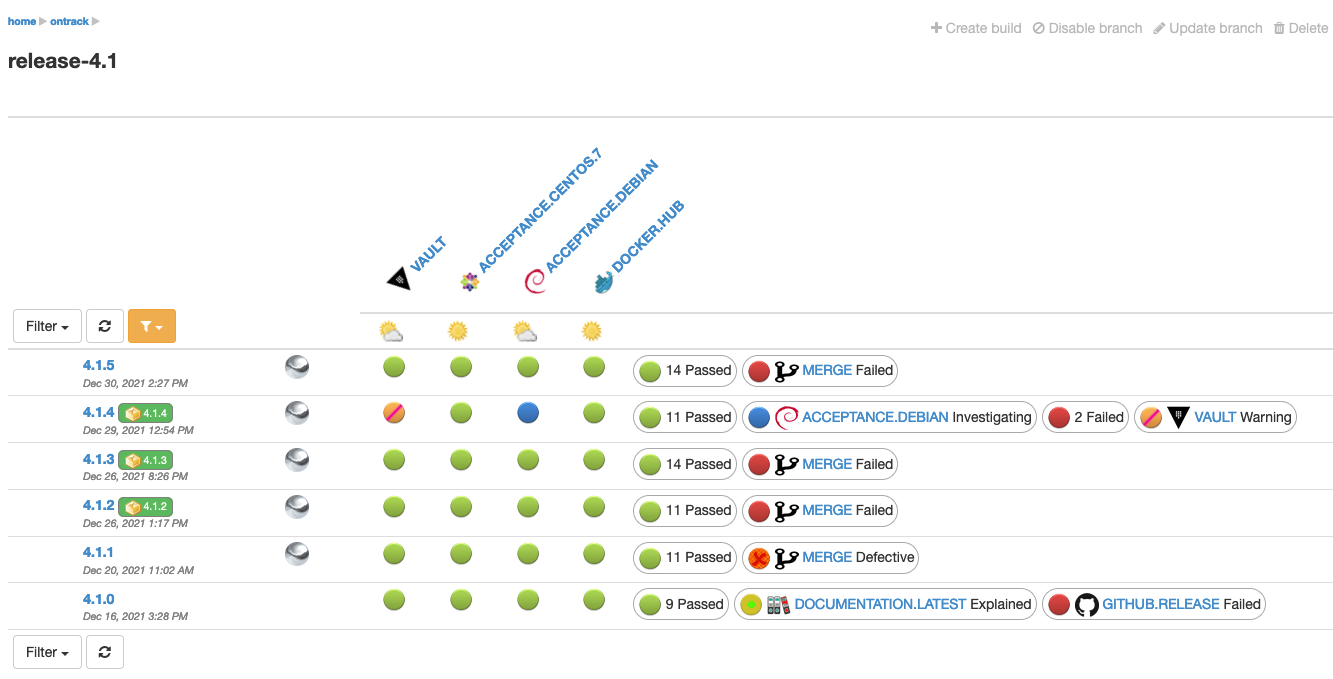

8.2.6. Validation stamp display options

In the branch view, you can tune the display of the validations using different options.

By default, you get all the validation stamps of the branch:

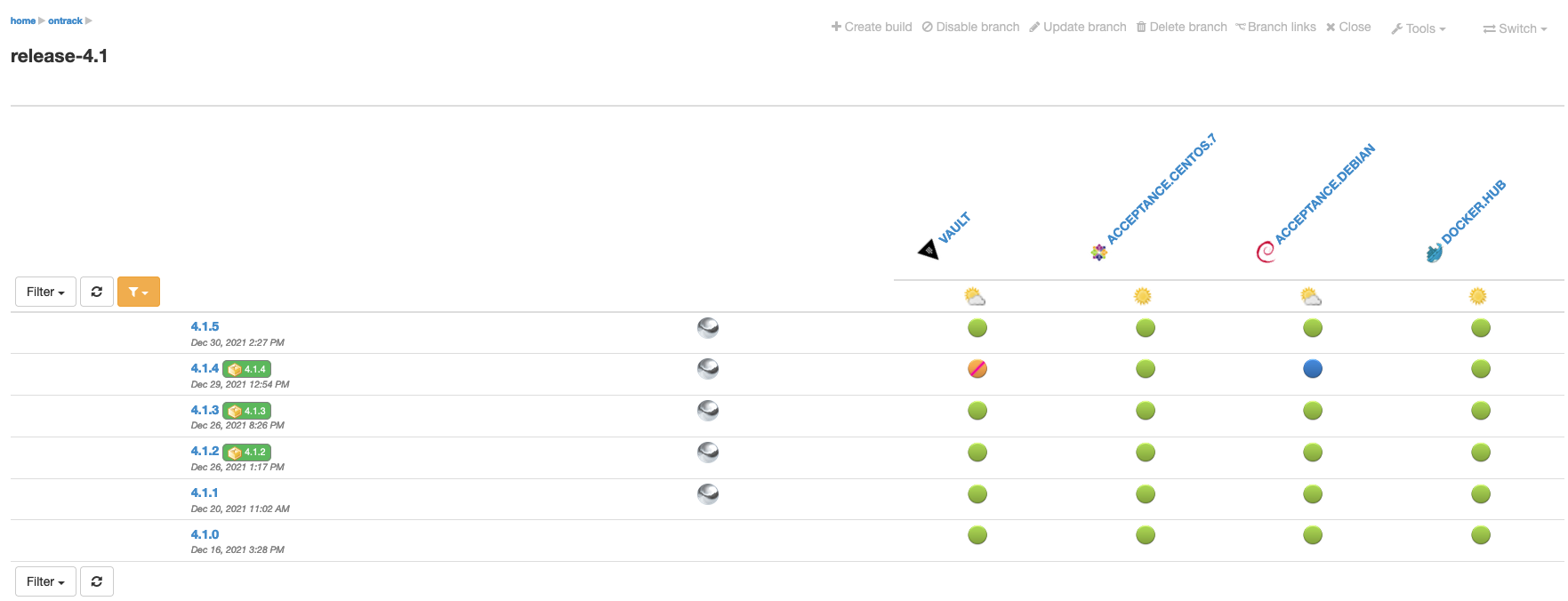

You can restrict the number of validation stamps being displayed by using a validation stamp filter:

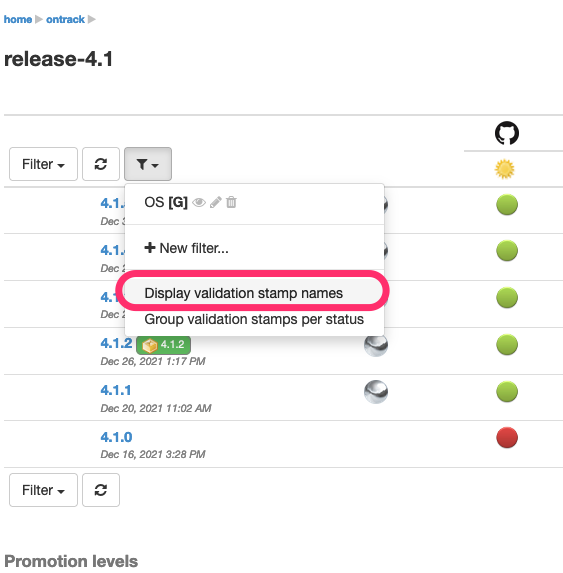

Additionally, you can display the names of the validation stamps by selecting the Display validation stamp names option in the validation stamp filter menu:

This displays:

or with a filter:

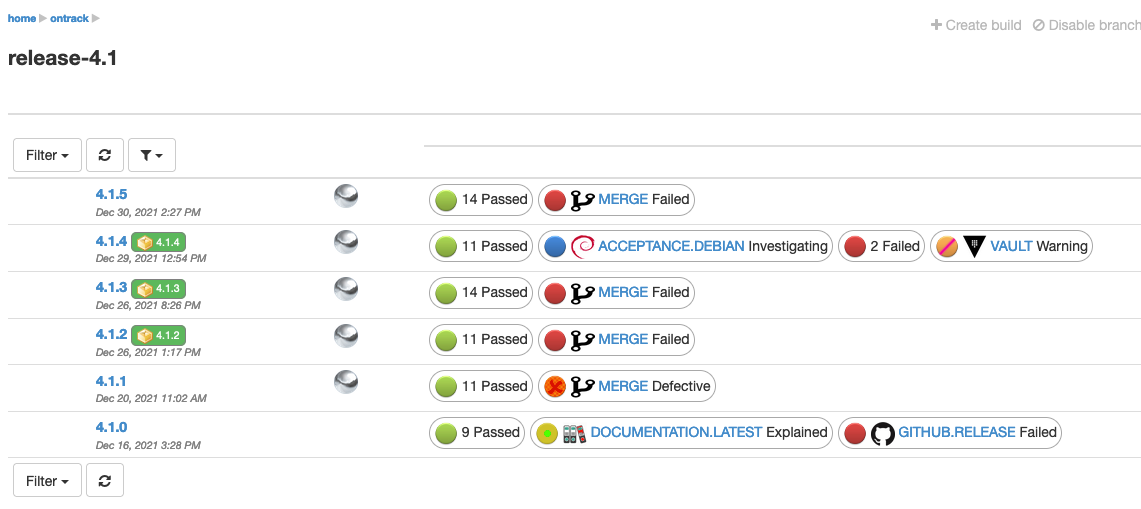

Finally, when dealing with too many validation stamps for the display to be OK, you can choose to group validations per status:

And this displays:

When using a validation stamp filter, the validation stamps matched by the selected filter are always displayed, on the left of the groups:

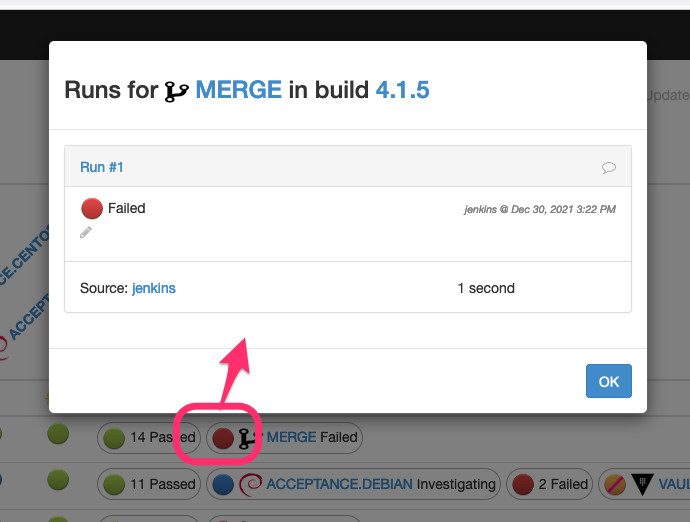

If one validation has a particular status, clicking on the validation displays the latest validation run, and from there, you can progress its status and/or enter comments:

When several validations have the same status, the dialog will display the list of validations having this status. Clicking on a validation will then display the latest validation run for this validation:

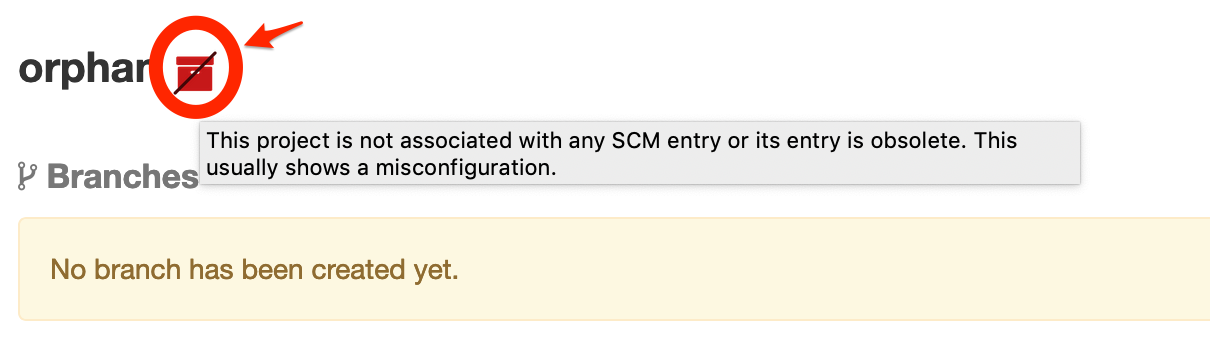

8.3. Working with SCM

Source Control Management (SCM) is at the core of Continuous Integration and Continuous Delivery chains. It’s therefore not a surprise that they play a major role in Ontrack.

8.3.1. SCM Catalog

Ontrack allows to collect information about all registered SCMs and to correlate this information with the Ontrack projects.

Model

A SCM Catalog entry represents a physical SCM repository which is accessible by Ontrack. An entry contains the following information:

-

SCM - type of SCM, like

githuborbitbucket. -

Configuration - associated configuration in Ontrack to access this repository (URL, credentials, etc.).

-

Repository - identifier for this repository. It depends on the type of SCM. For example, for GitHub, it can be name of the repository, like

nemerosa/ontrack.

A SCM catalog entry can be:

-

linked if an Ontrack project exists which is associated to this repository

-

unlinked otherwise

Some Ontrack projects are orphan if they are not associated with any repository accessible by Ontrack or if their associated repository is not accessible.

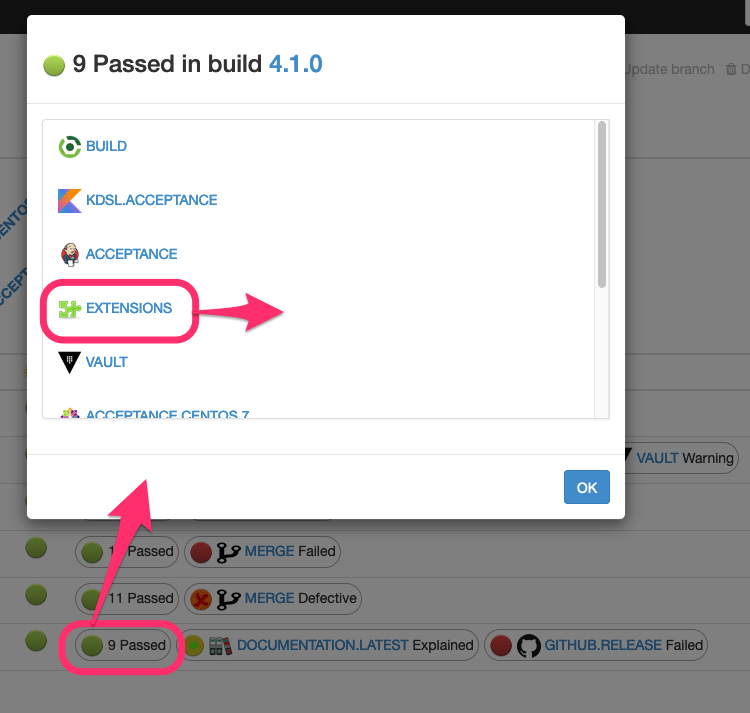

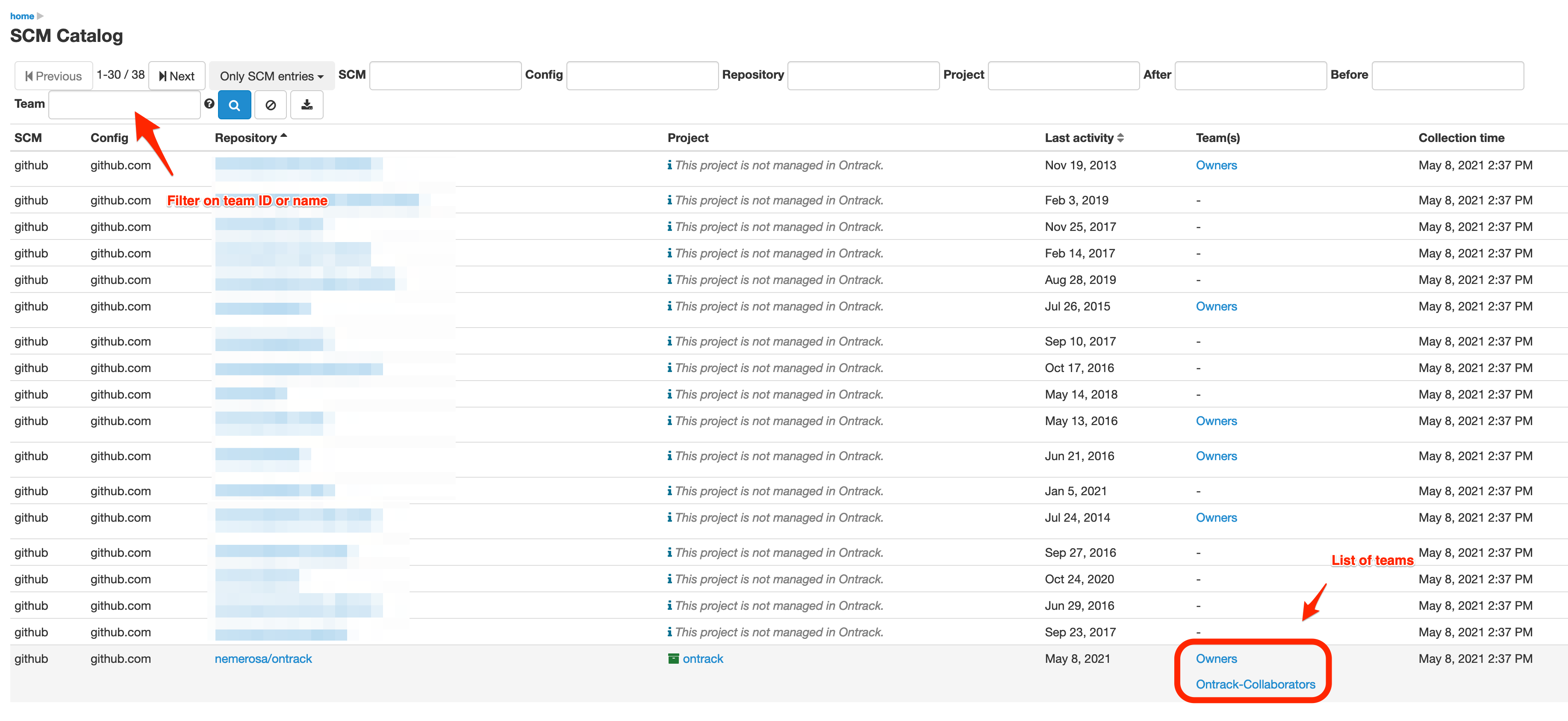

SCM Catalog list

To access the SCM catalog, you must be logged in. You must select the SCM Catalog item in your user menu.

The list looks like:

The Project column indicates if the entry is linked or unlinked. In case it is linked, a link to the Ontrack project page is available.

Filtering is possible using text boxes at the top. You can also navigate back and forth in the list using the Previous and Next buttons.

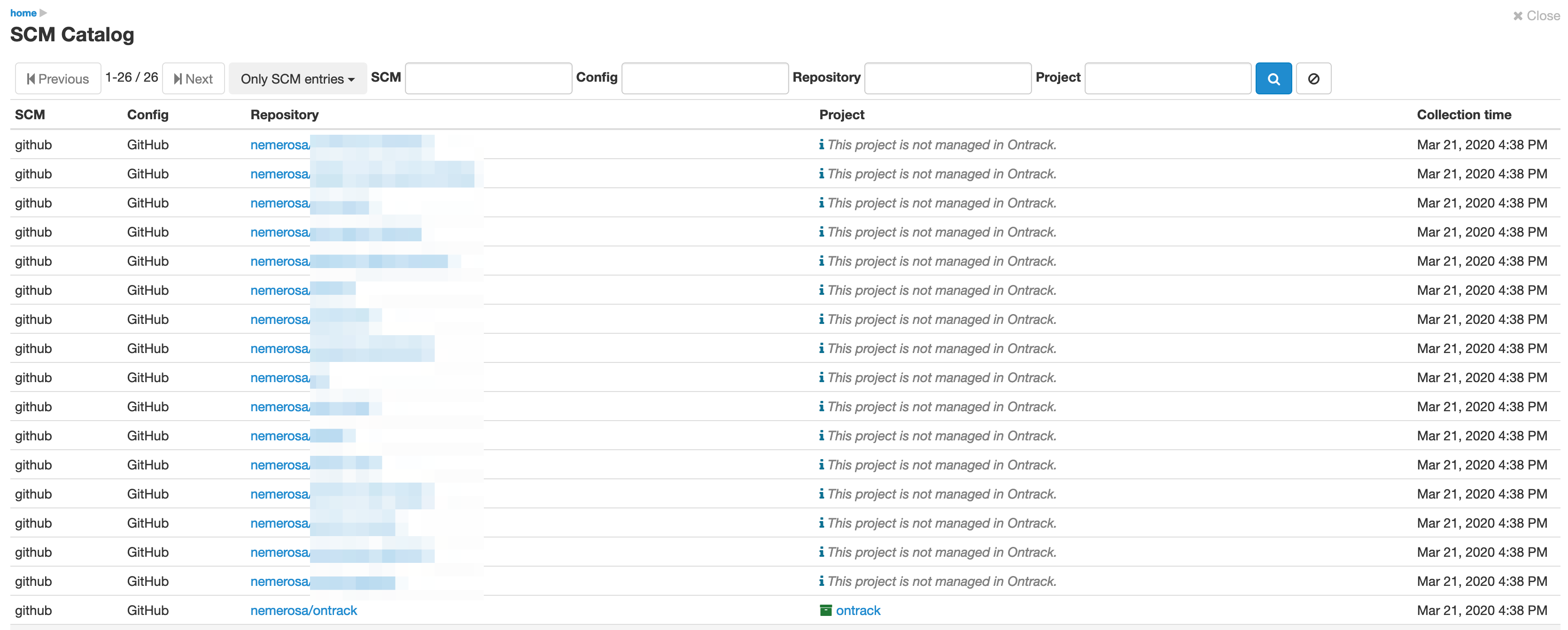

The main filter, labelled Only SCM entries, allows to select the type of entry:

-

Only SCM entries - selected by default, shows all repositories accessible by Ontrack

-

All entries and orphan projects - additionally, shows the orphan projects

-

Linked entries only - shows only the entries which are linked to projects

-

Unlinked entries only - shows only the unlinked entries

-

Orphan projects only - shows only the orphan projects, as shown below:

In this case, only the link to the project is available since no repository information is accessible.

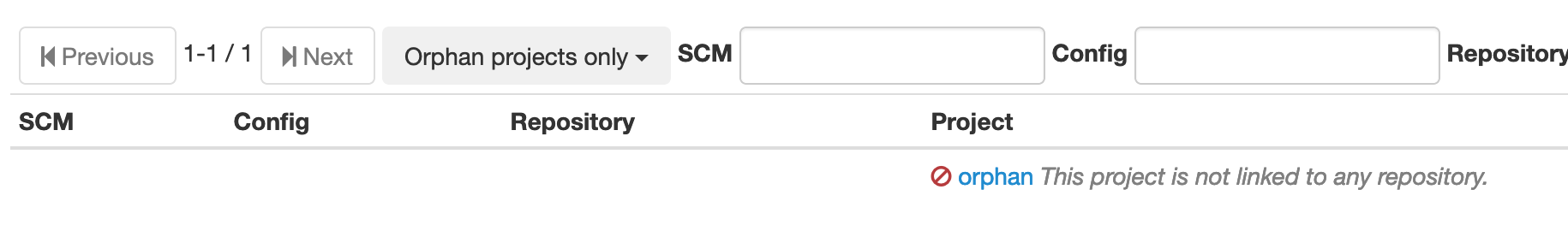

Orphan project decoration

Since orphan projects are an anomaly (because every Ontrack project should be associated with some kind of SCM), they get a special decoration, so that they can easily be identified (and fixed):

Project labels

If the collection of project labels is enabled, the following labels will be set for projects:

-

scm-catalog:entrywhen the project is associated with a SCM Catalog entry -

scm-catalog:no-entrywhen the project is NOT associated with a SCM Catalog entry

Those labels can be used to filter orphan projects on the home page for example, or in GraphQL queries.

Teams

For SCM which allow for this, the SCM catalog collects the list of teams for each repositoy.

|

The teams collection is only enabled for GitHub right now. When trying to get the teams for GitHub, the GitHub token needs to have |

The teams do appear in the SCM catalog list and can be used to filter the SCM catalog entries:

Each team is associated with a link to its page in the SCM.

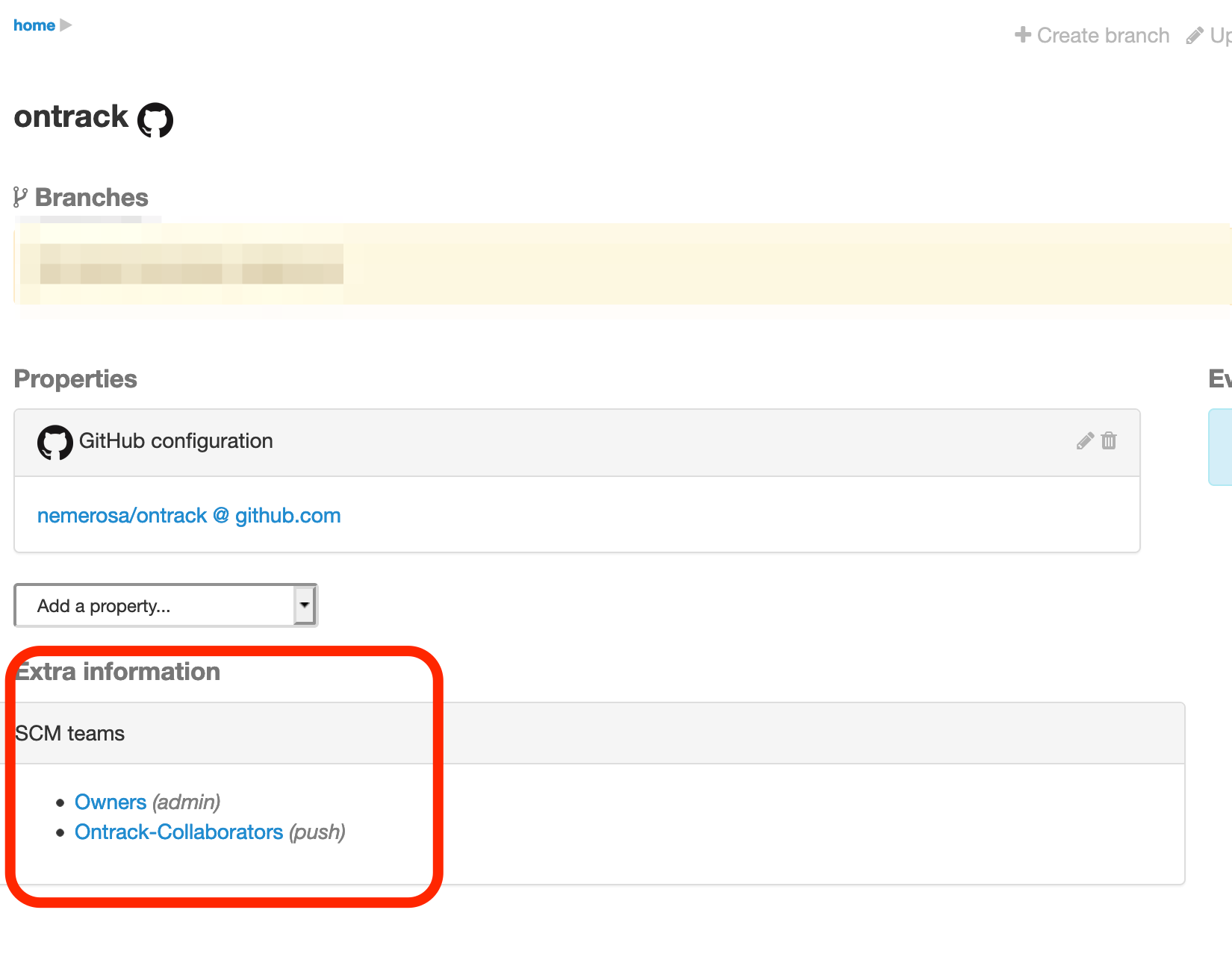

The teams information is also available in the Ontrack project page:

On this page, the role of the team for this project is also displayed, but usually, more details can be accessed by following the link to the SCM team page.

Catalog synchronization

Synchronization between the SCM and Ontrack can be enabled:

-

creating an Ontrack project automatically from an SCM repository

-

disabling Ontrack projects when their repository is no longer present

These two synchronizations are distinct and configured with their own flag in the SCM Catalog Synchronization settings.

GraphQL schema

The SCM Catalog is accessible through the Ontrack GraphQL schema.

At root level, the scmCatalog query allows to query the SCM

Catalog itself and to filter the catalog.

For example, to get the list of orphan projects:

{

scmCatalog(link: "ORPHAN") {

pageItems {

project {

name

}

}

}

}or to get the entries which are unlinked:

{

scmCatalog(link: "UNLINKED") {

pageItems {

entry {

scm

config

repository

repositoryPage

}

}

}

}| See the GraphQL schema documentation for more fields and filters. |

Additionally, the scmCatalogEntry field is available on the Project tpe

to provide information about any associated SCM Catalog entry:

{

projects(name: "ontrack") {

scmCatalogEntry {

scm

config

repository

repositoryPage

}

}

}Metrics

The following metrics are available:

-

ontrack_extension_scm_catalog_total(gauge) - count of SCM catalog entries + orphan projects -

ontrack_extension_scm_catalog_entries(gauge) - count of SCM catalog entries -

ontrack_extension_scm_catalog_linked(gauge) - count of linked SCM catalog entries -

ontrack_extension_scm_catalog_unlinked(gauge) - count of unlinked SCM catalog entries -

ontrack_extension_scm_catalog_orphan(gauge) - count of orphan projects

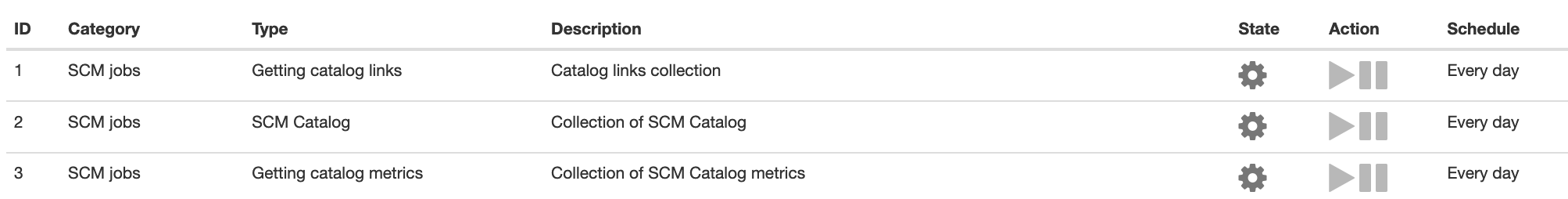

Administration

This feature is enabled by default but can be controlled using some administrative jobs:

-

Collection of SCM Catalog - gets the list of repositories accessible from Ontrack. Runs once a day.

-

Catalog links collection - gets the links between the projects and associated SCM repositories. Runs once a day.

-

Collection of SCM Catalog metrics - computes some metrics about the SCM catalog

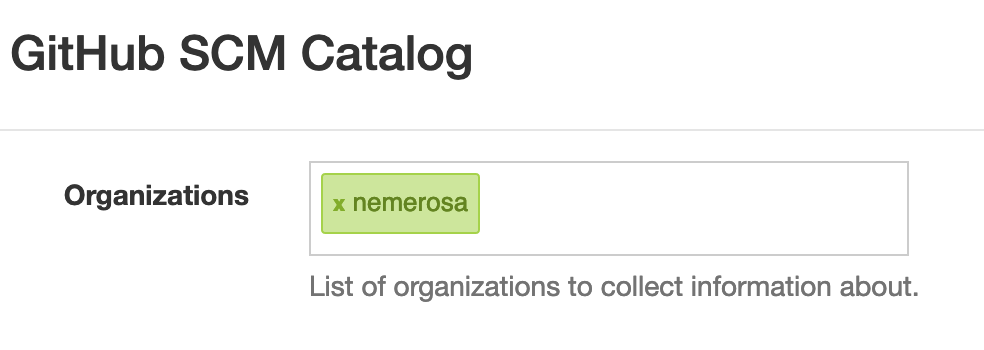

Specific configuration for GitHub

The GitHub repositories are not collected unless their organization is specifically allowed. By default, none are.

In order to enable the scanning of a GitHub organization,

log as administrator, go to the Settings, scroll to the

GitHub SCM Catalog section and enter the names of the

organizations to authorise for collection. For example, below,

only the nemerosa organization is allowed:

8.4. Workflows

Workflows allow the execution of several actions orchestrated in a DAG (directed acyclic graph).

|

As of version 4.8, workflows can only be triggered using the Notifications. There is already some partial and undocumented support through API calls to run some standalone workflows but this is very experimental. |

8.4.1. Workflows definitions

To run a workflow, you can define a notification whose channel is workflow.

This can be done through the UI or as code.

|

Workflows definitions in the UI is only supported in the Next UI of Ontrack and won’t be supported in the legacy UI. |

A workflow:

-

has a name, used for information and display purposes

-

has a list of nodes

Each node:

-

has an ID which must be unique inside the workflow

-

an executor ID that points to a workflow node executor

-

some data for the workflow node executor

-

a list of parent nodes

The list of parent nodes is what defines the workflow DAG.

|

When defining or running workflows, graph cycles are automatically detected. |

Workflows notifications can be defined as code, like all other notifications.

For example:

channel: workflow

channelConfig:

workflow:

name: My workflow

nodes:

- id: ticket

executorId: notification

data:

channel: jira-creation

channelConfig:

# Configuration for the ticket creation

- id: mail

executorId: notification

parents:

- id: ticket

data:

channel: mail

channelConfig:

# Configuration for the mail

template: |

Link to ticket: ${workflow.ticket?path=url}8.4.2. Workflows nodes executors

A workflow node executor is a component which is responsible to "run a node".

See Workflow nodes executors for a list of all existing workflow node executors.

8.4.3. Workflow templating

Many elements in the workflow definition are subject to templating.

The workflow name is itself considered as a template when being run as a notification (which is the default in 4.8).

When using notifications as node executors, the configuration elements are templates as usual.

Note that for a workflow notification, the event is passed as a context element and all template functions and sources are available.

Additionally, when a notification is run as part of a workflow, a new templating function is available: workflow.

This function allows the access to the output data of any successful node in the workflow.

For example, let’s take a workflow which:

-

creates a ticket in Jira

-

then send a link to this ticket with an email

channel: workflow

channelConfig:

workflow:

name: My workflow

nodes:

- id: ticket

executorId: notification

data:

channel: jira-creation

channelConfig:

# Configuration for the ticket creation

- id: mail

executorId: notification

parents:

- id: ticket

data:

channel: mail

channelConfig:

# Configuration for the mail

template: |

Link to ticket: ${workflow.ticket?path=url}The ticket node runs and set some information in its output (see Jira ticket creation (jira-creation) for the full details), including a url property.

Then, the mail node is run and is using the notification workflow node executor again, with the mail channel being configured to send a mail.

This channel can use the template for the mail’s body and is using the workflow function to get the output of the ticket node and the url property of its output.

8.4.4. Workflows management

The progress of running workflows can be accessed in Information > Workflow audit.

Clicking on a workflow displays more details about its current status, node per node.

When using the workflow notification channel, the workflow status link is also accessible from the Information > Notification recordings, when selecting the notification.

8.4.5. Workflows settings

Workflow statuses are saved by default for 14 days.

To change this value, you can go to System > Settings > Workflows.

This can also be defined as code using CasC:

ontrack:

config:

settings:

workflows:

retentionDuration: 1209600000 # 14 days in ms8.5. Delivery metrics

One of the core features of Ontrack is the assignment of promotion levels to some builds, either explicitly from the CI or through auto promotion.

Delivery metrics are about measuring the performance and stability of these promotions on four different axes:

-

lead time to promotion - how long does it take from the moment a build is created to the moment it is promoted to a given level? This gives an indication on the performance of your delivery.

-

frequency - how many promotions do you get over a given period of time? This gives an absolute indicator about how often your delivery process performs.

-

success rate - what is the percentage of builds reaching a given promotion level? While 100% is not a goal (because of the very nature of a delivery pipeline, where failure is expected when finding actual issues), high values indicate a high stability of your delivery process.

-

time to restore - given a build which is not promoted, how long does it take to restore this promotion? The time it takes to fix an issue is a strong indicator of the resilience of your delivery process.

These metrics are valid for:

-

one project

-

one branch

-

one promotion level

Additionally, Ontrack distinguishes between:

-

single project metrics - where we measure the performance of a promotion level within the same project

-

end-to-end project metrics - where we measure the performance of a promotion level across several projects, by following the build links. Single project metrics are a special case of end-to-end project metrics.

8.5.1. Single project delivery metrics

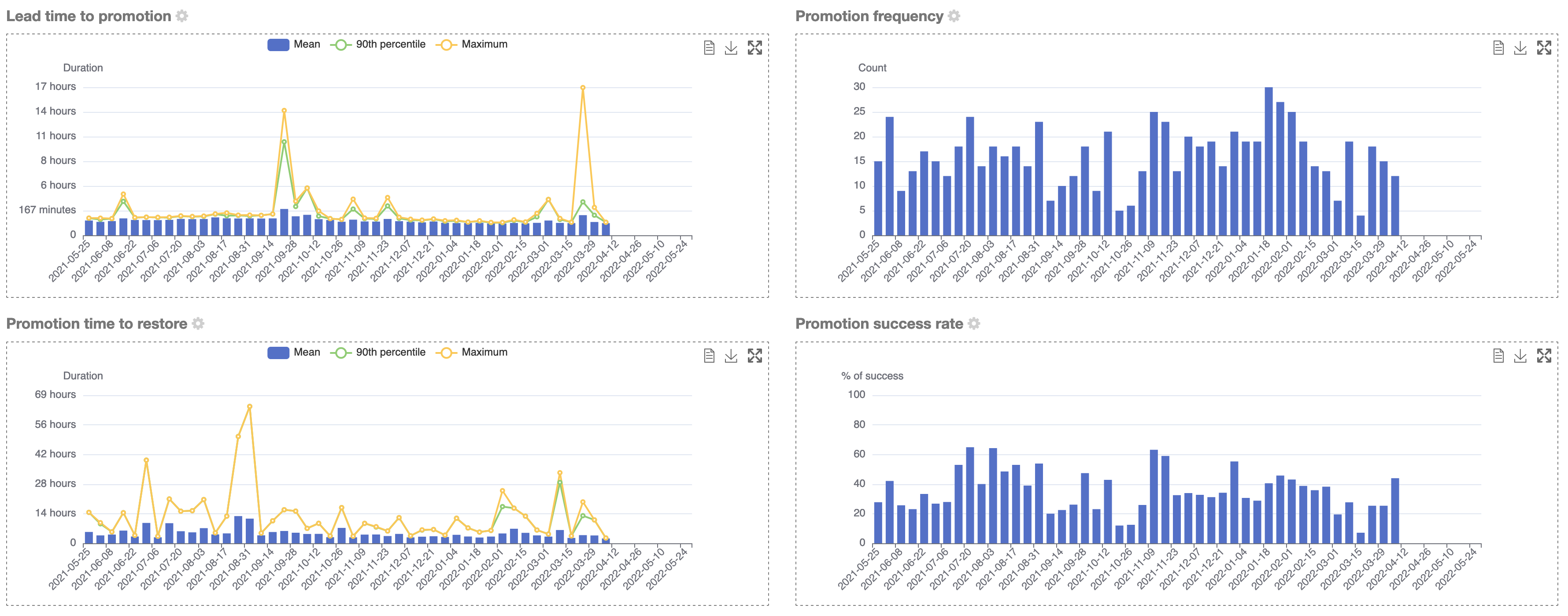

Navigate to any promotion level. Four charts are displayed, one for each of the axes:

-

lead time, with mean, 90th percentile & maximum

-

frequency, count of promotions over the selected period

-

success rate

-

time to restore, with mean, 90th percentile & maximum

For each chart, you can:

-

visualize the data

-

export the chart as an image

-

put the chart fullscreen

You can select the interval and the period (cog icon next to the chart title). These settings are valid for all the charts for all the promotions.

Additionally, you also have two charts a validation stamp level:

-

how long lasts the validation?

-

how stable it is?

|

For API access to the single project delivery metrics, consider using the exported End-to-end project delivery metrics. |

8.5.2. End-to-end project delivery metrics

|

As of now, end-to-end delivery metrics are not available in the UI, only as metrics. You’ll need to use tools like Grafana or Kibana to show them on charts. |

The end-to-end delivery metrics are performance metrics for the promotions across several projects, following the links between the builds.

For example:

-

given a project P depending on a component C which itself depends on a library L

-

given a promotion GOLD valid on all these components

We can measure the performance of this promotion by following the links from L to C to P.

The metric axes exposed at the beginning translate into:

-

lead time - how long does it take from the moment a L build is created to the moment it is available in C and P, with all builds are all levels being GOLD?

-

frequency - how often does it happen that for each L build, all linked L, C and P builds will all be GOLD?

-

success rate - for each L build, how many of them are GOLD and also their linked C and P builds?

-

time to restore - if a chain L → C → P is not GOLD, how long does it take to restore it to full GOLD?

All these metrics are exported for each transitive link, branch & promotion. In our previous example, we’ll have records for the pairs:

-

L:L - same as single project delivery metric

-

L:C, C:P - one level of dependency

-

L:P - two levels of dependency

The following metrics are available:

-

ontrack_dm_promotion_lead_time- in seconds - for the lead time -

ontrack_dm_promotion_success_rate- percentage (from 0.0 to 1.0) - success rate - can be used for the frequency by counting the occurences -

ontrack_dm_promotion_ttr- in seconds - for the time to restore

Each metric is associated with the following tags:

-

sourceProject, sourceBranch

-

targetProject, targetBranch

-

promotion

8.6. Auto versioning on promotion

Beside collecting data about the performance of your delivery, Ontrack can in turn use this information to drive other automation processes.

One of these processes that Ontrack can drive is the "auto promotion on promotion", which allows the propagation of versions from one repository to others using quality gates based on Ontrack promotions.

Let’s imagine a project parent which has a dependency on a module expressed through a version property somewhere in a file.

Ideally, whenever the module has a new version is a given range, we want this version to be used automatically by the parent.

Manually, we can do this of course:

-

we update the version in the

parent -

we perform any needed post-processing like a resolution of locks

-

we commit and push the change. Voilà.

If we put extra automation in the mix, you can define a perfectly valid auto versioning process.

This becomes more complex whenever having a new version of the module is not enough of a criteria to have it used.

This may be a release which has not been qualified yet by extra quality processes (long running ones maybe).

That’s where the concept of promotion in Ontrack can play a very important rule:

-

the

moduleis promoted -

this starts the following process:

-

Ontrack creates a pull request for the

parentwhere the version of themodulehas been changed to the one being promoted -

any required post processing is performed on this PR

-

when the PR is ready to be merged (with all its controls), it’s merged automatically

Result:

-

versions are propagated automatically only when "promotion gates" are opened

This is valid from one module to a project, and can be easily extended to a full tree of dependent modules.

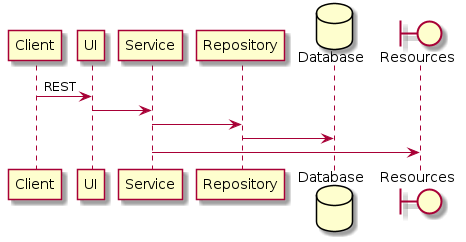

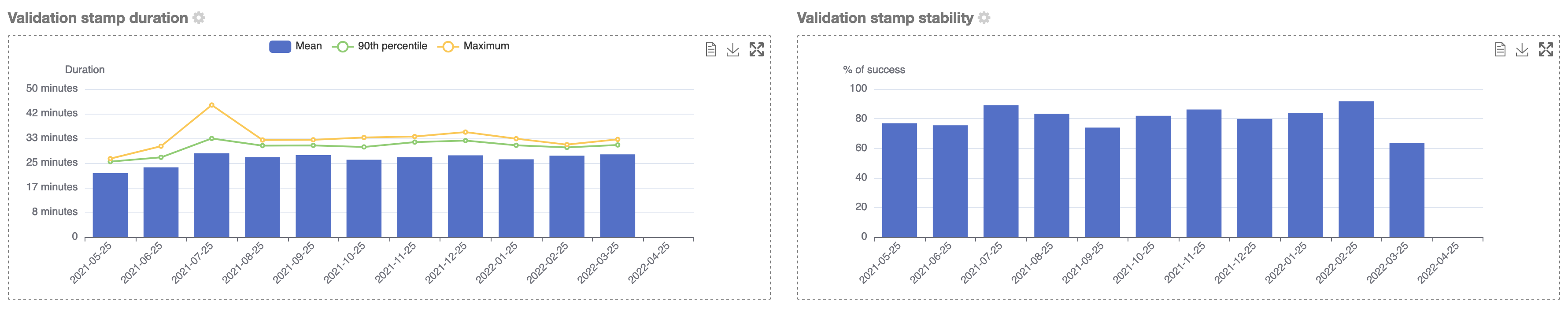

The diagram below shows how this works:

8.6.1. When not to use auto versioning

While auto versioning is pretty easy to put in place, it should not be used where traditional dependency management based on locks can be used instead for simple code libraries.

Auto versioning on promotion is however particularly well suited to deal with situations like:

-

modular monoliths

-

GitOps repositories with fixed versions

8.6.2. General configuration

Auto versioning is not enabled by default. This can be done in the Settings > Auto Versioning.

Three parameters are available:

-

Enabled - check to enable auto versioning

-

Audit retention - maximum number of seconds to keep non-running audit entries for auto versioning requests (see Audit logs for more information)

-

Audit cleanup - maximum number of seconds to keep audit entries for auto versioning requests. This time is counted after the retention period for the non-running entries (see Audit logs for more information)

|

These settings can also be configured as code. For example using: |

Queue configuration

Ontrack uses queues in RabbitMQ to schedule and process auto versioning events.

By default, one and only one queue, called auto-versioning.default.1 is available.

When the load becomes too important, you can use two mechanisms to scale the auto versioning:

-

increase the number of default queues. You can set the

ontrack.extension.auto-versioning.queue.scaleconfiguration property to a higher number than 1 -

create dedicated queues for some projects, see below.

Dedicated queues

For a given Ontrack project, you can setup a dedicated queue, which will be used exclusively for this project (whereas the default queues are shared between all projects).

Use the ontrack.extension.auto-versioning.queue.projects configuration property to defined a comma-separated list of projects which must have dedicated queues.

For example, using environment variables:

ONTRACK_EXTENSION_AUTO_VERSIONING_QUEUE_PROJECTS=project-one,project-two8.6.3. Branch configuration

The configuration of a branch for the auto versioning of its dependencies can be done using:

-

the GraphQL

setAutoVersioningConfigorsetAutoVersioningConfigByNamemutation -

Jenkins Ontrack pipeline library for Jenkins pipelines

-

GitHub for the GitHub ingestion

All these integrations rely on setting up a version of the auto versioning model for a branch which contains a list of auto versioning source configurations. This can be represented as YAML using:

# List of configurations

configurations:

# Project to watch

- sourceProject: String

# Name of the branch to take into account for the dependency. Several branches can be selected using

# a regular expression. If several branches are eligible, only the latest version

# can be used, based on inverted order of semantic versioning. Branches which

# do not comply with semantic versioning are discarded.

#

# See <Targeting a series of branches> for more information.

#

# Alternatively, the sourceBranch parameter can be set to "&<expression>" where `<expression>` is

# used to detect the valid source branch from the source project.

#

# See <Branch expressions> below for more information.

sourceBranch: String

# Promotion to watch

sourcePromotion: String

# Comma-separated list of file to update with the new version

targetPath: String

# Regex to use in the target file to identify the line to replace with the new version.

# It must have a capturing group in position 1, which will be replaced by the actual version.

# For example:

# `coreVersion = (.*)`

targetRegex: String?

# Can be used instead of the `regex` when we consider

# property files. In the sample above, the target property can be set to `coreVersion`

targetProperty: String?

# When `property` is used, `propertyRegex` can define a regular expression to extract / update

# the actual version from/into the property value. The regular expression must contain at least

# one capturing group, holding the actual version value. This `propertyRegex` is useful for cases

# when the version is part of a bigger string, for example, for a Docker image qualified name.

# Example:

# When targetProperty = "repository/image:tag"

# to target tag, you can use targetPropertyRegex: "repository\/image\:(.*)"

targetPropertyRegex: String

# when `property` is set, defines how the target file

# must be handled. For example, it could be a dependency notation in a NPM `package.json` file, or

# a property entry in Java properties file for Gradle. For NPM, use `npm`. For Java properties,

# use `properties`. When not specified, it defaults to `properties`. Other types are available,

# see <Target files types>

targetPropertyType: String?

# Check if the PR must be approved automatically or not (`true` by default)

autoApproval: Boolean?,

# Prefix to use for the upgrade branch in Git, defaults to `feature/auto-upgrade-<project>-<version>-<branch>`.

# If set manually, the `<project>` and `<version>` tokens can be used to be replaced respectively

# by the dependency project (the `project` above) and the actual version.

#

# The `<branch>` token is replaced by the MD5 digest of the target branch.

#

# Only the `<version>` token is required.

#

# Starting from 4.7.30 & 4.8.14, the `<branch>` token is not required but will be added (with the `-<branch>` suffix) if not present.

upgradeBranchPattern: String?

# Type of post-processing to launch after the version has been updated

postProcessing: String?

# Configuration of the post processing

postProcessingConfig: JsonNode?

# See "Auto versioning checks"

validationStamp: String?

# Auto approval mode

autoApprovalMode: CLIENT | SCM

# Build link creation when running the checks. True by default.

buildLinkCreation: Boolean?

# Qualifier to use for the build links

qualifier: String?

# How must the version to use be computed from the source build?

# See "Version source" below

versionSource: String?

# Additional paths to change.

# See "Additional paths" below

additionalPaths:

- # Comma-separated list of file to update with the new version

path: String

# Regex to use in the target file to identify the line to replace with the new version.

# The first matching group must be the version.")

regex: String?

# Optional replacement for the regex, using only a property name

property: String?

# Optional regex to use on the property value

propertyRegex: String?

# When property is defined, defines the type of property (defaults to Java properties

# file, but could be NPM, etc.)

propertyType: String?

# Source of the version for the build. By default, uses the build label is the source project

# is configured so, or the build name itself. This allows the customization of this behavior.

versionSource: String?|

The auto versioning model for a branch, if set, is shown on the branch page. |

Targeting a series of branches

In this scenario, the parent wants to be notified of a promotion on a series of branches, and Ontrack triggers the upgrade only if the promotion has occurred on the latest branch.

Setup:

-

set the

branchparameter to a regular expression on the Git branch, for example:release\/.\..*

How does it work?

-

when a promotion occurs on the desired level, Ontrack gets the list of branches for the dependency, orders them by descending version, filter them using the regular version, and triggers an upgrade only if the promoted branch is the first in this list (latest in terms of version)

Pro’s:

-

simple

-

allows auto upgrades fairly easily

Con’s:

-

the dependency must really take care of a strong semantic versioning

Branch expressions

The sourceBranch parameter can be set to &<expression> where <expression> is an expression used to detect the source branch on the source project for a branch eligible for auto versioning.

Supported values are:

®ex

By using:

sourceBranch: "®ex:<regex>"this is equivalent to the default behaviour:

sourceBranch: "<regex>"&same

The source branch must have the exact same name as the target branch.

Example: if you have a branch release-1.24 on a parent project P and you want to get updates from a dependency project only for the same branch, release-1.24, you can use:

sourceBranch: "&same"&most-recent

Two branches (release/1.1 & release/1.2) are available for a project which is dependency of an auto versioned parent project with the following default branch source:

branch: 'release\/1\..*'In this scenario, no promotion has been granted yet in release 1.2 of the dependency.

When 1.1 is promoted, Ontrack identifies a branch on the parent project to be a potential candidate for auto versioning.

This branch is configured to accept only the latest release/1.* branch, which is - now - the release/1.2.

Therefore, a 1.1 promotion is no longer eligible as soon as the 1.2 branch was created (and registered in Ontrack).

What exactly do we want to achieve? In this scenario, we always want the version promoted in 1.1 as long as there is none in 1.2. Let’s imagine we promote a 1.1 while 1.2 was already promoted, what then? How do we protect ourselves?

The idea is to accept a promotion as long as there is no such a promotion in later branches.

-

a 1.1 is promoted and there is no such promotion in more recent branches (1.2, etc.) - we accept it

-

a 1.1 is promoted and there is already such a promotion in a more recent branch (1.2 for example) - we reject it

To implement this strategy, we have to use:

branch: '&most-recent:release\/1\..*'&same-release

On the same model as the "&same" sourceBranch parameter, there is the possibility to get a "&same-release" branch source.

This is to be used in cases where the dependency and its parent follow the same branch policy at release/ branch level, but only for a limited number of levels.

For example, a parent has release branches like release/1.24.10, with a dependency using on release/1.24.15. We want release/1.x.y to always depend on the latest release/1.x.z branch (using 1. as a common prefix).

One way to do this is to use: sourceBranch: "release/1.24.*" but this would force you to always update the source branch parameter for every branch:

-

release/1.24.* in release/1.24.x branch

-

release/1.25.* in release/1.25.x branch

-

etc.

A better way is to use, in this scenario:

sourceBranch: "&same-release:2"This means:

-

if you’re on a release/x.y.z branch, use release/x.y.* for the latest branch

-

for any other branch (main) for example, we use the same branch

|

Note that |

Version source

By default, the version to use in the target project is computed directly from the build which has been promoted.

The default behavior is:

-

if the source project is configured to use the labels for the builds ("Build name display" property), the label (or release, or version) of the build is used. If this label is not present, the auto versioning request will be rejected

-

if the source project is not configured, the build name is taken as the version

This version computation can be adapted using the versionSource configuration parameter.

The different options for this parameter are:

-

default- uses the default behavior described above -

name- uses the name of the build, regardless of the source project configuration -

labelOnly- uses the label attached to the build, regardless of the source project configuration. If there is no label, the auto versioning request is rejected -

metaInfo/<category>/<name>ormetaInfo/<name>- the version is the value of a meta information item of the request category (optional) or name. If so such meta information is found, the auto versioning request is rejected.

Additional paths

The additionalPaths configuration property allows the specification of additional paths to update

instead of just the main one.

|

This can somehow be considered as a form of post-processing but without the need to call an external service. |

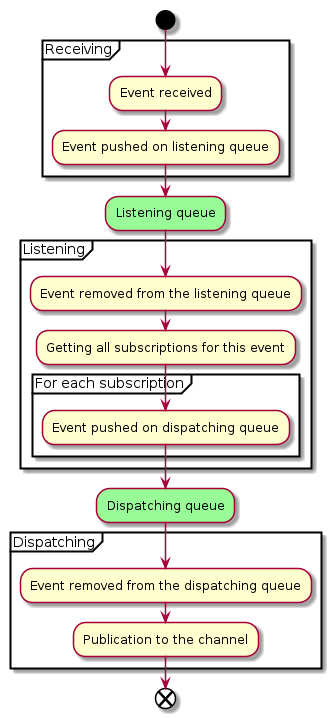

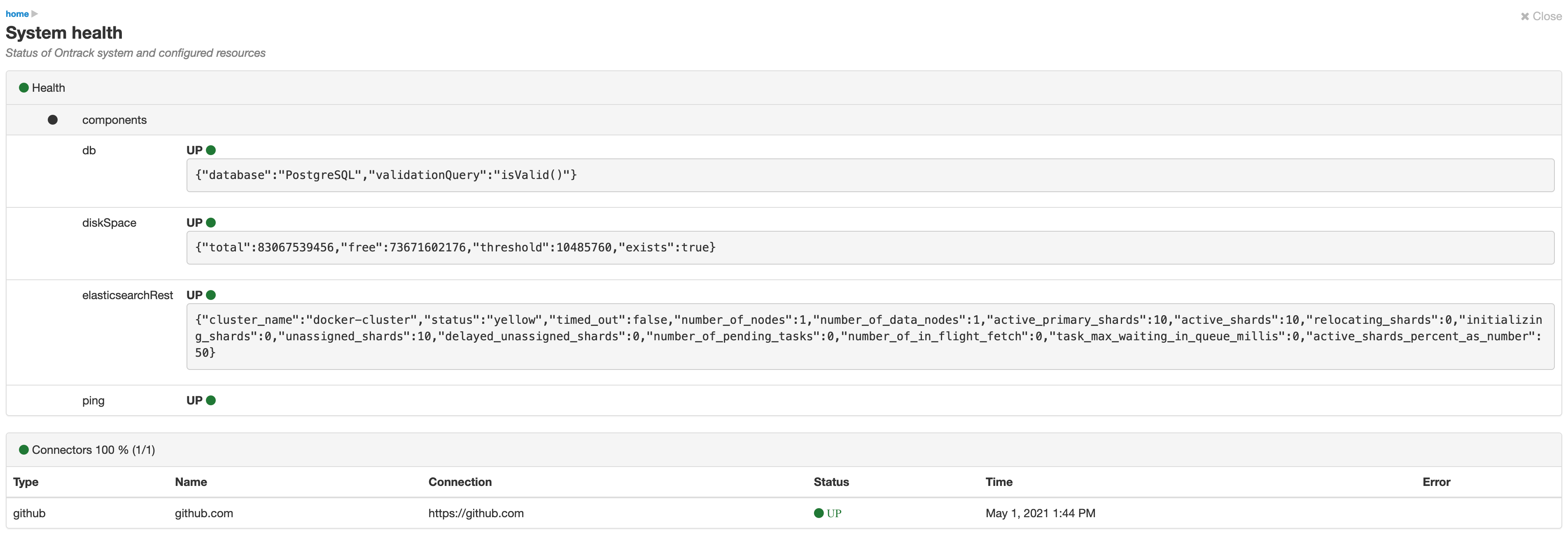

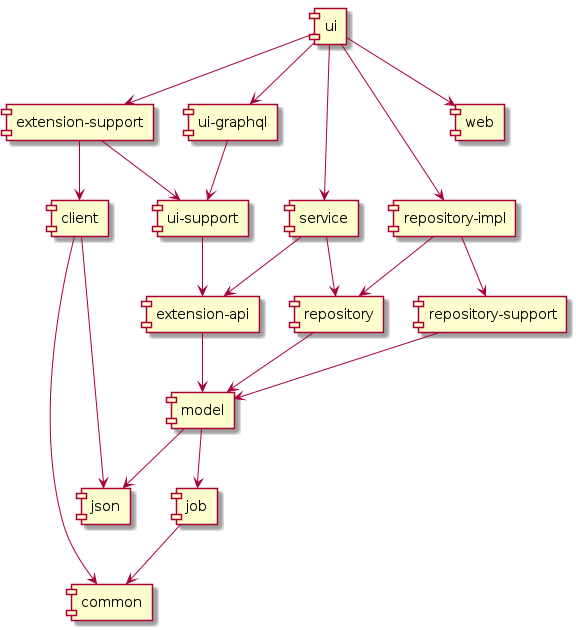

Example: